Security advisory: Potential Use-After-Free issue in Qt for WebAssembly’s implementation of QNetworkReply

A recently reported potential Use-After-Free issue in Qt’s wasm implementation of QNetworkReply has been assigned the CVE id CVE-2024-30161.

Key Takeaways from 2024 Embedded World

With the 2024 Embedded World Exhibition & Conference in the rear view, here are some key impressions from the ICS team around the future of embedded development.

Qt for MCUs 2.5.3 LTS Released

Recursive Instantiation with Qt Quick and JSON

Recently I was tasked with coming up with an architecture for remote, real-time instantiation and updating of arbitrary QML components.

This entry shows how you can use a simple variation of the factory method pattern in QML to instantiate arbitrary components. I’ve split my findings into three blog entries, each covering a slightly different topic. Part 1 focuses on the software design pattern used to dynamically instantiate components. Part 2 shows how to lay out these dynamic components using QML’s positioning and layout APIs. The last entry, consisting of Parts 3 and 4, addresses the anchors API and important safety aspects.

This is Part 1: Recursive Instantiation with Qt Quick and JSON.

The original factory method pattern made use of static methods to programmatically instantiate objects of different classes, instead of having to call their constructors. It achieved that by having the classes share a common ancestor. Our variation of the popular pattern uses a Loader to choose which component to load, and a Repeater to dynamically instantiate arbitrary instances of this loader using a model.

Here we specify which components to instantiate using a JSON array, and use a Repeater to load them.

```qml
id: root

// A JSON representation of a QML layout:
property var factoryModel: [
    {
        "component": "Button",
    },
    {
        "component": "Button",
    }
]

// Root of our component factory
Repeater {
    model: root.factoryModel
    delegate: loaderComp
}
```

To be able to instantiate any kind of item, you can use a Component with a Loader inside, as the Repeater’s delegate. This allows you to load a different component based on the Repeater’s model data.

```qml
// Root component of the factory and nodes
Component {
    id: loaderComp

    Loader {
        id: instantiator

        required property var modelData

        sourceComponent: switch (modelData.component) {
            case "Button":
                return buttonComp;
            case "RowLayout":
                return rowLayoutComp;
            case "Item":
            default: return itemComp;
        }
    }
}
```

To assign values from the model to the component, add an onItemChanged handler, which runs whenever the Loader’s item property changes. I use this handler to take care of anything that involves the component’s properties:

```qml
// Root component of the factory and nodes
Component {
    id: loaderComp

    Loader {
        id: instantiator

        required property var modelData

        sourceComponent: switch (modelData.component) {
            case "Button":
                return buttonComp;
            case "RowLayout":
                return rowLayoutComp;
            case "Item":
            default: return itemComp;
        }

        onItemChanged: {
            // itemChanged is also emitted when the item goes away; nothing to configure then
            if (!item)
                return;

            // Pass children (see explanation below)
            if (typeof(modelData.children) === "object")
                item.model = modelData.children;

            // Button properties
            switch (modelData.component) {
                case "Button":
                // If the model contains a given value, we assign it:
                if (typeof(modelData.text) !== "undefined")
                    item.text = modelData.text;
                break;
            }

            // Item properties
            // Since Item is the base type of every component we load, we don't need
            // to check whether the component supports Item properties before assigning them.
            // Position the Loader itself (loaderComp is the Component, not an Item):
            if (typeof(modelData.x) !== "undefined")
                instantiator.x = Number(modelData.x);
            if (typeof(modelData.y) !== "undefined")
                instantiator.y = Number(modelData.y);
            // ...
        }
    }
}
```

Examples of components that loaderComp could load are defined below. To enable recursion, these components must contain a Repeater that instantiates children components, with loaderComp set as the delegate:

```qml
Component {
    id: itemComp

    Item {
        // Exposed as "model" so the Loader can assign item.model = modelData.children
        property alias model: itemRepeater.model

        children: Repeater {
            id: itemRepeater
            delegate: loaderComp
        }
    }
}

Component {
    id: buttonComp

    Button {
        property alias model: itemRepeater.model

        children: Repeater {
            id: itemRepeater
            delegate: loaderComp
        }
    }
}

Component {
    id: rowLayoutComp

    RowLayout {
        property alias model: itemRepeater.model

        children: Repeater {
            id: itemRepeater
            delegate: loaderComp
        }
    }
}
```

The Repeater inside the components allows us to instantiate components recursively, by nesting one or more branches of child components in the model, like so:

```qml
// This model lays out buttons horizontally
property var factoryModel: [
    {
        "component": "RowLayout",
        "children": [
            {
                "component": "Button",
                "text": "Button 1"
            },
            {
                "component": "Button",
                "text": "Button 2"
            }
        ]
    }
]
```
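The recursion itself does not depend on QML. As a rough illustration only (this is not Qt code), the Python sketch below models the same idea under a few assumptions: `Node`, `FACTORY` and `instantiate` are hypothetical names, a dictionary lookup stands in for the Loader’s `switch`, and the loop over `children` stands in for the nested Repeater.

```python
from dataclasses import dataclass, field


# Hypothetical stand-in for a QML object; only the shape of the tree matters here.
@dataclass
class Node:
    kind: str
    props: dict = field(default_factory=dict)
    children: list = field(default_factory=list)


def make_item(spec):
    return Node("Item")


def make_button(spec):
    # Like the onItemChanged handler: copy "text" only if the model defines it.
    node = Node("Button")
    if "text" in spec:
        node.props["text"] = spec["text"]
    return node


def make_row_layout(spec):
    return Node("RowLayout")


# Plays the role of the switch in the Loader's sourceComponent binding.
FACTORY = {
    "Button": make_button,
    "RowLayout": make_row_layout,
    "Item": make_item,
}


def instantiate(spec):
    """Build one node, then recurse into its children (the nested Repeater)."""
    make = FACTORY.get(spec.get("component"), make_item)  # unknown names fall back to Item
    node = make(spec)
    for key in ("x", "y"):
        if key in spec:
            node.props[key] = float(spec[key])  # like Number(modelData.x)
    for child_spec in spec.get("children", []):
        node.children.append(instantiate(child_spec))
    return node


def instantiate_all(model):
    """The root Repeater: one delegate per top-level entry."""
    return [instantiate(spec) for spec in model]


factory_model = [
    {
        "component": "RowLayout",
        "children": [
            {"component": "Button", "text": "Button 1"},
            {"component": "Button", "text": "Button 2"},
        ],
    }
]

tree = instantiate_all(factory_model)
print(tree[0].kind, [c.props["text"] for c in tree[0].children])
# RowLayout ['Button 1', 'Button 2']
```

Unknown component names fall back to a plain Item, mirroring the `default` branch of the QML switch.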

Here we’ve seen how we can use a Repeater, a JSON model, a Loader delegate, and simple recursive definition to instantiate arbitrary QML objects from a JSON description. In my next entry I will focus on how you can lay out these arbitrarily instantiated objects on your screen.

Thanks to Kevin Krammer and Jan Marker whose insights helped improve the code you’ve seen here.

I hope you’ve found this useful! Part 2 is available, or will be soon, by following this link.

Reference

- To see a real world application of this factory design pattern in action, watch “QML for building beautiful desktop apps | Dev/Des 2021”, by Prashanth N. Udupa https://www.youtube.com/watch?v=l2nC-onPGQs

If you like this article and want to read similar material, consider subscribing via our RSS feed.

Subscribe to KDAB TV for similar informative short video content.

KDAB provides market leading software consulting and development services and training in Qt, C++ and 3D/OpenGL. Contact us.

The post Recursive Instantiation with Qt Quick and JSON appeared first on KDAB.

Qt Project: Top Contributors of 2023!

2023 was a successful year for Qt, with highlights including the Qt 6.5 and Qt 6.6 releases, Qt World Summit, and Qt Contributor Summit.

Our community stays vibrant and engaged with the Qt Project through our forums and mailing lists: asking and answering questions, offering technical advice, reporting bugs, contributing patches, and helping Qt flourish in many other ways.

Insights from Industry Experts: Improving Software Dev Productivity

Software development is a dynamic and constantly evolving field that requires professionals to stay up-to-date with the latest technologies and tools to improve their productivity and efficiency. For developers, the ultimate goal is to complete tasks effectively, leading to better products and faster time-to-market. These topics were explored at Qt World Summit 2023 (QtWS23) last November, where experts Kate Gregory, Kevlin Henney, and Volker Hilsheimer shared valuable insights.

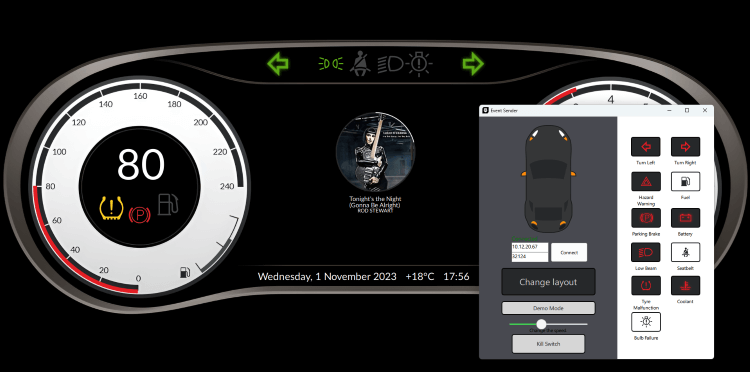

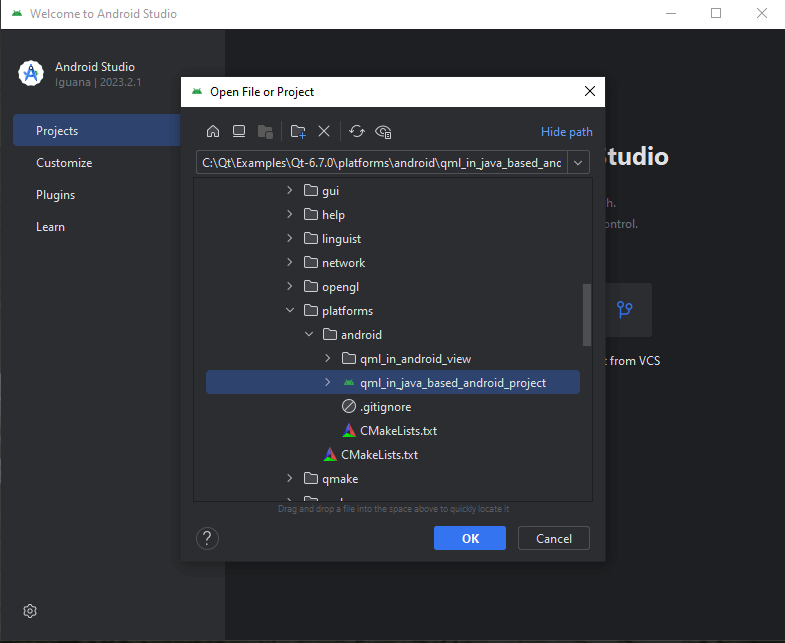

Qt for Android Automotive 6.6.3 is released

The latest release of Qt for Android Automotive (QtAA) is out, based on Qt 6.6.3, with more than 300 bug fixes, security updates, and other improvements on top of the Qt 6.6.2 release.

Qt for Python release: 6.7 is now available! 🐍

Qt Creator 13 - CMake Update

Here is a set of highlighted CMake features and fixes in Qt Creator 13. Have a look at the ChangeLog for all the CMake changes.

Embedding the Servo Web Engine in Qt

With the Qt WebEngine module, Qt makes it possible to embed a webview component inside an otherwise native application. Under the hood, Qt WebEngine uses the Chromium browser engine, currently the de facto standard engine for such use cases.

While writing a brand-new, standards-compliant browser engine is notoriously close to unachievable nowadays (certainly so with Chromium coming in at 31 million lines of code), the Rust ecosystem has been brewing up a new web rendering engine called Servo. Initially created by Mozilla in 2012, Servo is still being developed today, now under the stewardship of the Linux Foundation.

Because a browser is inherently exposed to the internet, it is usually the biggest attack vector on a system. This makes Servo very attractive as an alternative browser engine, given that it is written in a memory-safe language.

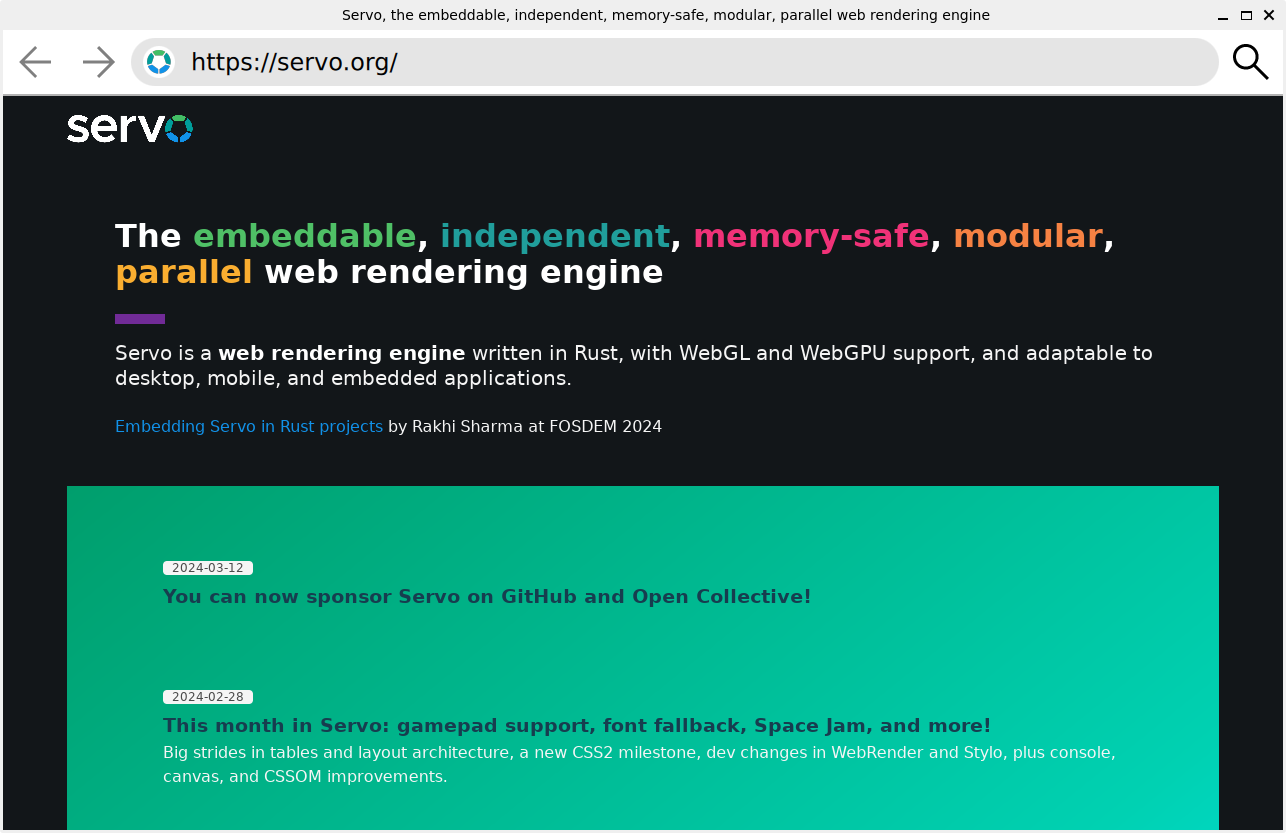

A Servo WebView

At KDAB we managed to embed the Servo web engine inside Qt, by using our CXX-Qt library as a bridge between Rust and C++. This means that we can now use Servo as an alternative to Chromium for webviews in Qt applications.

From a QML perspective, this component is similar to the Chromium-based WebView: it provides the canGoBack, canGoForward, loading, title and url properties and the goBack and goForward methods. The QML item itself behaves the same way, with its contents rendered to match its size.

```qml
import QtQuick
import QtQuick.Window

import com.kdab.servo

Window {
    height: 720
    width: 1280
    title: webView.title
    visible: true

    ServoWebView {
        id: webView
        anchors.fill: parent
        url: "https://servo.org/"
    }
}
```

The screenshot below shows a basic QML application with a toolbar containing back, forward, go buttons and an address bar. We use CXX-Qt to define Qt properties, invokables, and event handlers (e.g. touch events) in Rust and trigger events in the Servo engine. Then any update requests from Servo can trigger an update of the Qt side via the Qt event loop.

As we move towards stabilising CXX-Qt at KDAB, investigating real world use cases, such as exposing Servo to Qt, allows us to identify potential missing functionality and explore what is possible when joining the Rust and Qt ecosystems together.

Technical details

Under the hood, most of the heavy lifting is done by our CXX-Qt bindings, which already bridge the obvious gap between the Rust and Qt/C++ worlds. However, some further glue is needed to connect Servo’s rendering contexts so that its surfaces can be rendered into the actual Qt application. Internally, Servo uses surfman, a Rust library for managing rendering surfaces. At the time of writing, surfman supports OpenGL and Metal, with support for Vulkan being planned.

We use surfman to create a new OpenGL context that Servo then uses for rendering. To render the result into the Qt Quick scene, we borrow the surface from Servo, create a new framebuffer object, and blit the framebuffer into a QQuickFramebufferObject on the Qt side.

Future possibilities

Servo development is active again after a period of less activity, so the API is evolving and there is work underway to improve it for embedders. This could result in a simpler, documented process for integrating Servo into apps. Also, as part of the Tauri and Servo collaboration, a backend for WRY could become available. All of this could mean many changes for the bridge to Qt: currently this demo directly constructs Servo components (similar to servoshell), but it could instead use a shared library or WRY.

On the Qt side, there are areas that could be improved or investigated further. For example, we currently use a framebuffer object, which forces the use of the OpenGL backend, but with RHI, developers might want to use other backends. One way to solve this for QML would be to change the implementation to use a custom Qt Scene Graph node, which could then have implementations for Vulkan, OpenGL, etc., and read from the Servo engine.

Alternatively, Qt 6.7 introduced the QQuickRhiItem class, which is currently a technical preview but can be used as a rendering-API-agnostic alternative to QQuickFramebufferObject.

If this sounds interesting to your use case or you would like to collaborate with us, the code for this tech demo is available on GitHub under KDABLabs/cxx-qt-servo-webview or contact KDAB directly. We also have a Zulip chat if you want to discuss any parts of bridging Servo or CXX-Qt with us.

Come and see us at Embedded World 2024 where we will have the Servo demo and others on display!

The post Embedding the Servo Web Engine in Qt appeared first on KDAB.

Use Compute Shader in Qt Quick

With this blog post, we introduce the QtQuickComputeItem, a Qt Quick item that allows you to easily integrate compute shaders into your Qt Quick code.

Compute shaders are used to perform arbitrary computations on the GPU. For example, the screenshot below shows a Qt Quick application that generates Gray-Scott reaction-diffusion patterns. The simulation is executed by a compute shader that is configured directly in QML.
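For readers unfamiliar with the simulation: the Gray-Scott model evolves two concentrations u and v as du/dt = Du∇²u - uv² + F(1 - u) and dv/dt = Dv∇²v + uv² - (F + k)v. The Python sketch below performs one explicit Euler step on the CPU to show the per-cell work such a shader would do. It is not basysKom’s shader; the grid size, time step and parameter values (Du, Dv, F, k) are illustrative assumptions.

```python
# One explicit-Euler step of the Gray-Scott model on a periodic 2D grid.
# Parameter values are common illustrative choices, not taken from the basysKom demo.
DU, DV = 0.16, 0.08   # diffusion rates of u and v
F, K = 0.035, 0.065   # feed and kill rates
DT = 1.0              # time step


def laplacian(grid, x, y):
    """5-point Laplacian with wrap-around (periodic) boundaries."""
    h, w = len(grid), len(grid[0])
    return (grid[(y - 1) % h][x] + grid[(y + 1) % h][x]
            + grid[y][(x - 1) % w] + grid[y][(x + 1) % w]
            - 4.0 * grid[y][x])


def gray_scott_step(u, v):
    """Return new (u, v) grids. Each cell only reads the old grids and writes its
    own entry, which is why one compute-shader invocation per cell fits well."""
    h, w = len(u), len(u[0])
    new_u = [[0.0] * w for _ in range(h)]
    new_v = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            uu, vv = u[y][x], v[y][x]
            uvv = uu * vv * vv
            new_u[y][x] = uu + DT * (DU * laplacian(u, x, y) - uvv + F * (1.0 - uu))
            new_v[y][x] = vv + DT * (DV * laplacian(v, x, y) + uvv - (F + K) * vv)
    return new_u, new_v


# Start from the uniform state u = 1, v = 0 and seed a single cell with v.
N = 8
u = [[1.0] * N for _ in range(N)]
v = [[0.0] * N for _ in range(N)]
u[4][4], v[4][4] = 0.5, 0.25
u, v = gray_scott_step(u, v)
print(round(v[4][5], 4))  # 0.02: v has started diffusing into the neighbouring cell
```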

Continue reading Use Compute Shader in Qt Quick at basysKom GmbH.

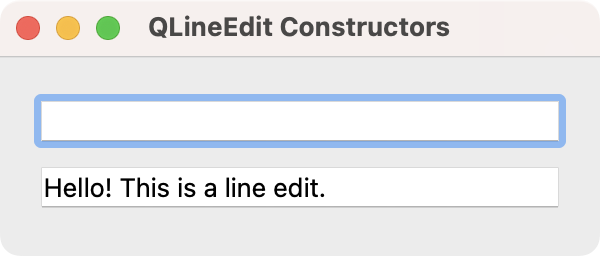

Q&A: How Do I Display Images in PySide6? — Using QLabel to easily add images to your applications

Adding images to your application is a common requirement, whether you're building an image/photo viewer, or just want to add some decoration to your GUI. Unfortunately, because of how this is done in Qt, it can be a little bit tricky to work out at first.

In this short tutorial, we will look at how you can insert an external image into your PySide6 application layout, using both code and Qt Designer.

Which widget to use?

Since you want to insert an image, you might expect to use a widget named QImage or similar, but that would make a bit too much sense! QImage is actually Qt's image object type, which is used to store the actual image data for use within your application. The widget you use to display an image is QLabel.

The primary use of QLabel is of course to add labels to a UI, but it also has the ability to display an image (or pixmap) instead, covering the entire area of the widget. Below we'll look at how to use QLabel to display an image in your applications.

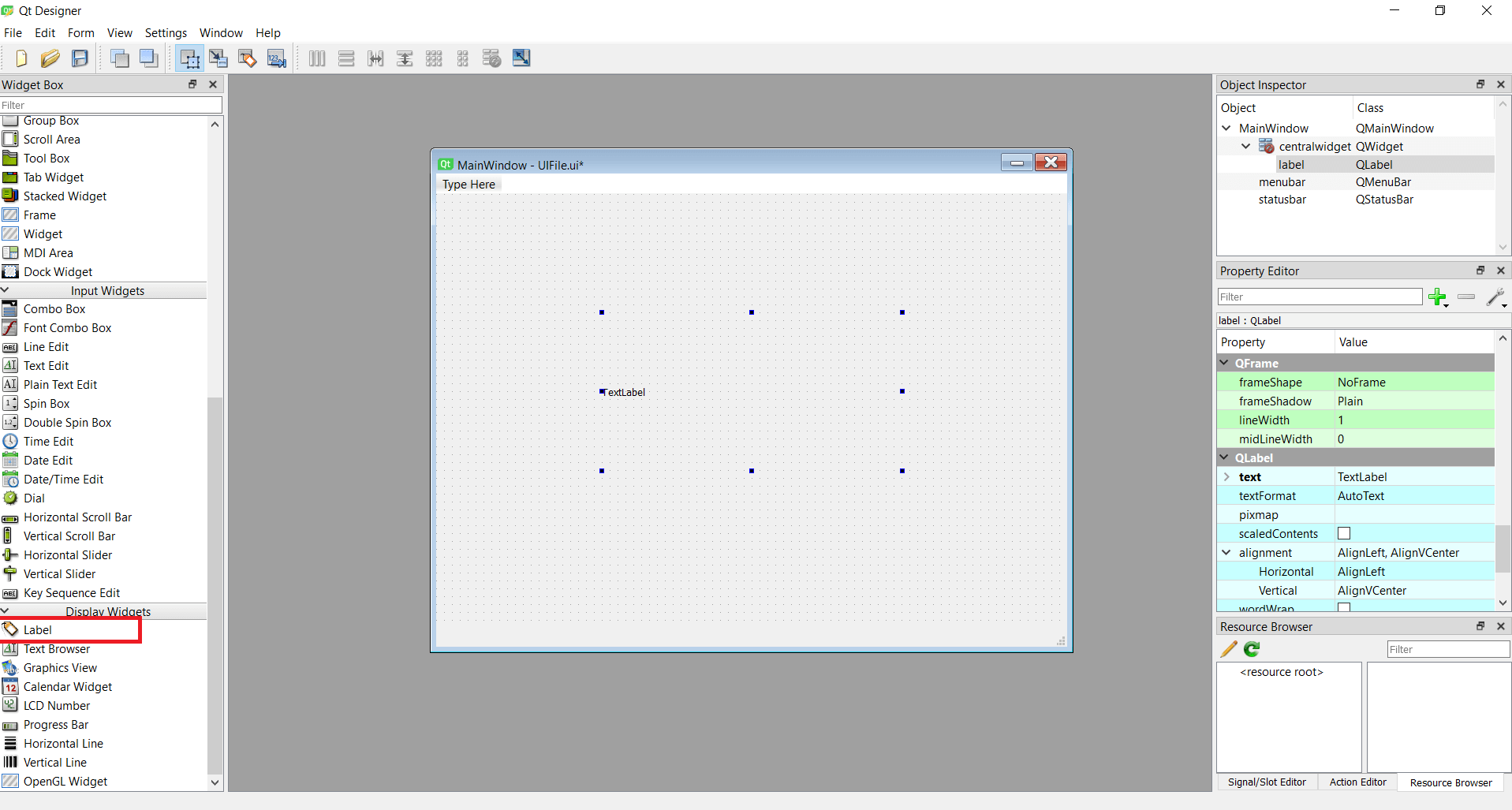

Using Qt Designer

First, create a MainWindow object in Qt Designer and add a "Label" to it. You can find Label under Display Widgets at the bottom of the left-hand panel. Drag this onto the QMainWindow to add it.

MainWindow with a single QLabel added

Next, with the Label selected, look in the right hand QLabel properties panel for the pixmap property (scroll down to the blue region). From the property editor dropdown select "Choose File…" and select an image file to insert.

As you can see, the image is inserted, but it is kept at its original size, cropped to the boundaries of the QLabel box. You need to resize the QLabel to see the entire image.

In the same controls panel, click to enable scaledContents.

When scaledContents is enabled, the image is resized to fit the bounding box of the QLabel widget. This shows the entire image at all times, although it does not respect the aspect ratio of the image if you resize the widget.

You can now save your UI to file (e.g. as mainwindow.ui).

To view the resulting UI, we can use the standard application template below. This loads the .ui file we've created (mainwindow.ui), creates the window and starts up the application.

```python
import sys

from PySide6 import QtWidgets
from PySide6.QtUiTools import QUiLoader

loader = QUiLoader()
app = QtWidgets.QApplication(sys.argv)
window = loader.load("mainwindow.ui", None)
window.show()
app.exec()
```

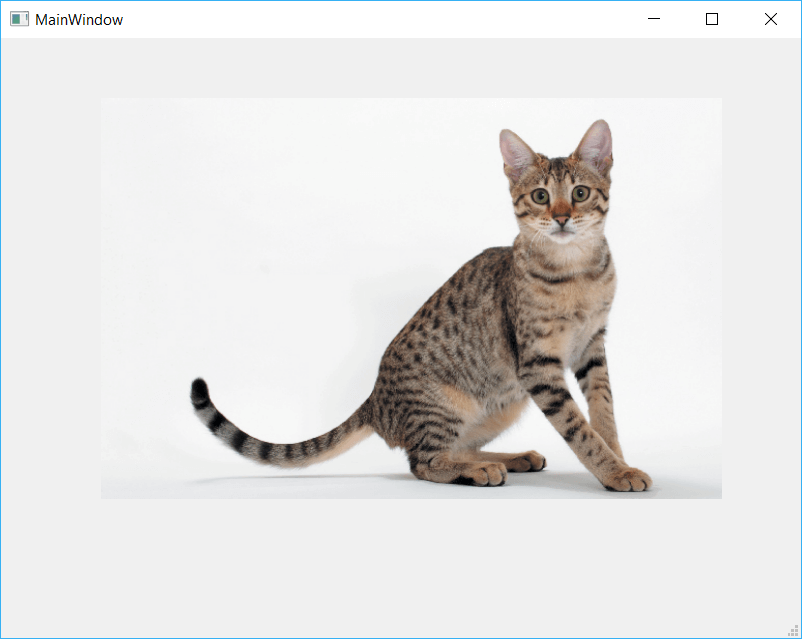

Running the above code will create a window, with the image displayed in the middle.

QtDesigner application showing a Cat

Using Code

Instead of using Qt Designer, you might also want to show an image in your application through code. As before we use a QLabel widget and add a pixmap image to it. This is done using the QLabel method .setPixmap(). The full code is shown below.

```python
import sys

from PySide6.QtGui import QPixmap
from PySide6.QtWidgets import QMainWindow, QApplication, QLabel


class MainWindow(QMainWindow):
    def __init__(self):
        super(MainWindow, self).__init__()
        self.title = "Image Viewer"
        self.setWindowTitle(self.title)

        label = QLabel(self)
        pixmap = QPixmap('cat.jpg')
        label.setPixmap(pixmap)
        self.setCentralWidget(label)

        self.resize(pixmap.width(), pixmap.height())


app = QApplication(sys.argv)
w = MainWindow()
w.show()
sys.exit(app.exec())
```

The block of code below shows the process of creating the QLabel, creating a QPixmap object from our file cat.jpg (passed as a file path), setting this QPixmap onto the QLabel with .setPixmap() and then finally resizing the window to fit the image.

```python
label = QLabel(self)
pixmap = QPixmap('cat.jpg')
label.setPixmap(pixmap)
self.setCentralWidget(label)
self.resize(pixmap.width(), pixmap.height())
```

Launching this code will show a window with the cat photo displayed and the window sized to the size of the image.

QMainWindow with Cat image displayed

Just as in Qt Designer, you can call .setScaledContents(True) on your QLabel to enable scaled mode, which resizes the image to fit the available space.

```python
label = QLabel(self)
pixmap = QPixmap('cat.jpg')
label.setPixmap(pixmap)
label.setScaledContents(True)
self.setCentralWidget(label)
self.resize(pixmap.width(), pixmap.height())
```

Notice that you set the scaled state on the QLabel widget and not the image pixmap itself.
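As noted in the Qt Designer section, scaled mode stretches the image without keeping its aspect ratio. If you need the ratio preserved, one common alternative is to scale the pixmap yourself, for example with `pixmap.scaled(label.size(), Qt.AspectRatioMode.KeepAspectRatio, Qt.TransformationMode.SmoothTransformation)` (with `Qt` imported from `PySide6.QtCore`). The pure-Python function below only sketches the size arithmetic behind keep-aspect-ratio scaling, so you can see which size to expect; `fit_keep_aspect` is a hypothetical helper, not a Qt API.

```python
def fit_keep_aspect(img_w, img_h, box_w, box_h):
    """Largest (width, height) inside box_w x box_h that keeps the img_w:img_h ratio.

    Try to fill the box height first; if the matching width would overflow the
    box, fill the width instead. Integer division mimics whole-pixel sizes.
    """
    if img_w <= 0 or img_h <= 0:
        return (box_w, box_h)  # degenerate image: nothing sensible to preserve
    scaled_w = box_h * img_w // img_h
    if scaled_w <= box_w:
        return (scaled_w, box_h)
    return (box_w, box_w * img_h // img_w)


print(fit_keep_aspect(800, 600, 400, 400))  # (400, 300): landscape image, width-limited
print(fit_keep_aspect(600, 800, 400, 400))  # (300, 400): portrait image, height-limited
```

Unlike setScaledContents(True), a pixmap scaled this way is fixed at the size it was scaled to, so you would typically rescale it when the label is resized.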

Conclusion

In this quick tutorial we've covered how to insert images into your Qt UIs using QLabel both from Qt Designer and directly from PySide6 code.

Introducing the ConnectionEvaluator in KDBindings

Managing the timing and context of signals and slots in multithreaded applications, especially those with a GUI, can be a complex task. The concept of deferred connection evaluation offers a nice and easy API, allowing for controlled and efficient signal-slot connections. This approach is particularly useful when dealing with worker threads and GUI threads.

A classic example where cross-thread signal-slot connections are useful is a worker thread that performs computations or collects data and periodically emits signals. The GUI thread can connect to these signals and then display the data however it wishes. Graphical display usually must happen on the main thread and at specific times. Therefore, controlling exactly when the receiving slots get executed is of critical importance for correctness and performance.

A Quick Recap on Signals & Slots and KDBindings

Signals and slots are integral to event handling in C++ applications. Signals, emitted upon certain events or conditions, trigger connected slots (functions or methods) to respond accordingly. This framework is highly effective, but there are cases where immediate slot invocation is not ideal, such as in multithreaded applications where you might want to emit a signal in one thread and handle it in another, like a GUI event loop.

KDBindings is a header-only C++17 library that implements the signals and slots design pattern. In addition, KDBindings also provides an easy-to-use property and bindings system to enable reactive programming in C++. For more information, read this introduction blog.

Understanding Deferred Connection Evaluation

In Qt, when a signal is connected across threads with a queued connection, the slot is executed by the event loop of the receiving object's thread. As KDBindings doesn’t have its own event loop and needs to be able to integrate into any framework, we’re introducing the ConnectionEvaluator as our solution to these nuanced requirements. It allows deferred evaluation of connections, providing much-needed flexibility in handling signal emissions.

In multithreaded environments, the immediate execution of slots in response to signals can lead to complications. Deferred connection evaluation addresses this by delaying the execution of a slot until a developer-determined point. This is achieved through a ConnectionEvaluator, akin to a BindingEvaluator, which governs when a slot is called.

Implementing and Utilizing Deferred Connections

We have introduced connectDeferred. This function, mirroring the standard connect method, takes a ConnectionEvaluator as its first argument, followed by the standard signal and slot parameters.

Signals emitted are queued in the ConnectionEvaluator instead of immediately triggering the slot. Developers can then execute these queued slots at an appropriate time, akin to the evaluateAll method in the BindingEvaluator.

How It Works

1. Create a ConnectionEvaluator:

```cpp
auto evaluator = std::make_shared<ConnectionEvaluator>(); // Shared ownership for proper lifetime management
```

2. Connect with Deferred Evaluation:

```cpp
Signal<int> signal;
int value = 0;

auto connection = signal.connectDeferred(evaluator, [&value](int increment) {
    value += increment;
});
```

3. Queue Deferred Connections

When the signal emits, connected slots aren’t executed immediately but are queued:

```cpp
signal.emit(5); // The slot is queued, not executed.
```

4. Evaluate Deferred Connections

Execute the queued connections at the desired time:

```cpp
evaluator->evaluateDeferredConnections(); // Executes the queued slot (evaluator is a shared_ptr).
```
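The four steps can be modelled in a few lines of Python to make the queue-then-evaluate behaviour concrete. This is neither KDBindings (a C++ library) nor its API: `Signal`, `ConnectionEvaluator`, `connect_deferred` and `evaluate_deferred_connections` are hypothetical Python stand-ins whose names merely echo the C++ calls above. The lock assumes emissions may arrive from another thread.

```python
import threading


class ConnectionEvaluator:
    """Hypothetical queue of deferred slot calls (not the KDBindings class)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pending = []  # zero-argument callables waiting to run

    def enqueue(self, call):
        with self._lock:
            self._pending.append(call)

    def evaluate_deferred_connections(self):
        # Take the whole queue under the lock, then run the slots without holding it.
        with self._lock:
            pending, self._pending = self._pending, []
        for call in pending:
            call()


class Signal:
    def __init__(self):
        self._slots = []

    def connect(self, slot):
        self._slots.append(slot)  # immediate: runs inside emit()

    def connect_deferred(self, evaluator, slot):
        # emit() will only queue a call with the emitted arguments bound.
        self._slots.append(lambda *args: evaluator.enqueue(lambda: slot(*args)))

    def emit(self, *args):
        for slot in list(self._slots):
            slot(*args)


evaluator = ConnectionEvaluator()
signal = Signal()
value = [0]  # a list so the slot can mutate it, like capturing by reference


def add(increment):
    value[0] += increment


signal.connect_deferred(evaluator, add)
signal.emit(5)
print(value[0])  # 0: the slot is only queued
evaluator.evaluate_deferred_connections()
print(value[0])  # 5
```

Swapping the pending list out before running the slots is one possible design choice: a slot that emits again during evaluation is queued for the next round rather than extending the current one.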

Usage Example

To illustrate how this enhanced signal/slot system works in a multithreaded scenario, let’s have a look at a usage example. Consider a scenario where a worker thread emits signals, but we want the connected slots to be executed in an event loop on the GUI thread:

```cpp
Signal<int> workerSignal;
int guiValue = 0;

auto evaluator = std::make_shared<ConnectionEvaluator>();

// Connect a slot to the workerSignal with deferred evaluation
workerSignal.connectDeferred(evaluator, [&guiValue](int value) {
    // This slot will be executed later in the GUI thread's event loop
    guiValue += value;
});

// ... Worker thread emits signals ...

// In the GUI thread's event loop or at the right time
evaluator->evaluateDeferredConnections();

// The connected slot is now executed, and guiValue is updated
```
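The same worker-to-GUI handoff can be sketched with Python’s standard `queue.Queue`, which is already thread-safe: the worker thread queues calls instead of running the slot, and the "GUI" thread drains the queue when it chooses. All names here are hypothetical; the example only mimics the pattern above and does not use KDBindings.

```python
import queue
import threading

pending = queue.Queue()               # thread-safe queue of deferred slot calls
gui_value = 0
gui_thread = threading.get_ident()    # the thread that will run the slots
ran_on = set()                        # records which thread(s) actually ran them


def on_worker_value(value):
    # Runs wherever the queue is drained, i.e. on the "GUI" thread below.
    global gui_value
    gui_value += value
    ran_on.add(threading.get_ident())


def worker():
    for value in range(1, 11):
        # Deferred "emit": queue the call instead of invoking the slot here.
        pending.put(lambda value=value: on_worker_value(value))


def evaluate_deferred_connections():
    # Drain everything queued so far without blocking.
    while True:
        try:
            call = pending.get_nowait()
        except queue.Empty:
            break
        call()


t = threading.Thread(target=worker)
t.start()
t.join()                          # a real GUI loop would not wait like this
evaluate_deferred_connections()   # e.g. once per frame on the GUI thread
print(gui_value)                  # 55
```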

In this example, the connectDeferred function allows us to queue the connection for deferred execution, and the ConnectionEvaluator determines when it should be invoked. This level of control enables more sophisticated and responsive applications.

Conclusion

In simple terms, the ConnectionEvaluator is a valuable tool we’ve added to KDBindings. It lets you decide when exactly your connections should ‘wake up’ and do their jobs. This is especially helpful in complex applications, particularly multithreaded ones, where you want to make sure that certain tasks are performed at the right time and in the right order. Think of it like having a remote control for your connections, allowing you to press ‘play’ whenever you’re ready.

It is also worth mentioning that we will be integrating this feature into the KDFoundation library and event loop in the KDUtils GitHub repo. Additionally, it could easily be integrated into any application or framework, whether or not it uses an event loop.

The post Introducing the ConnectionEvaluator in KDBindings appeared first on KDAB.

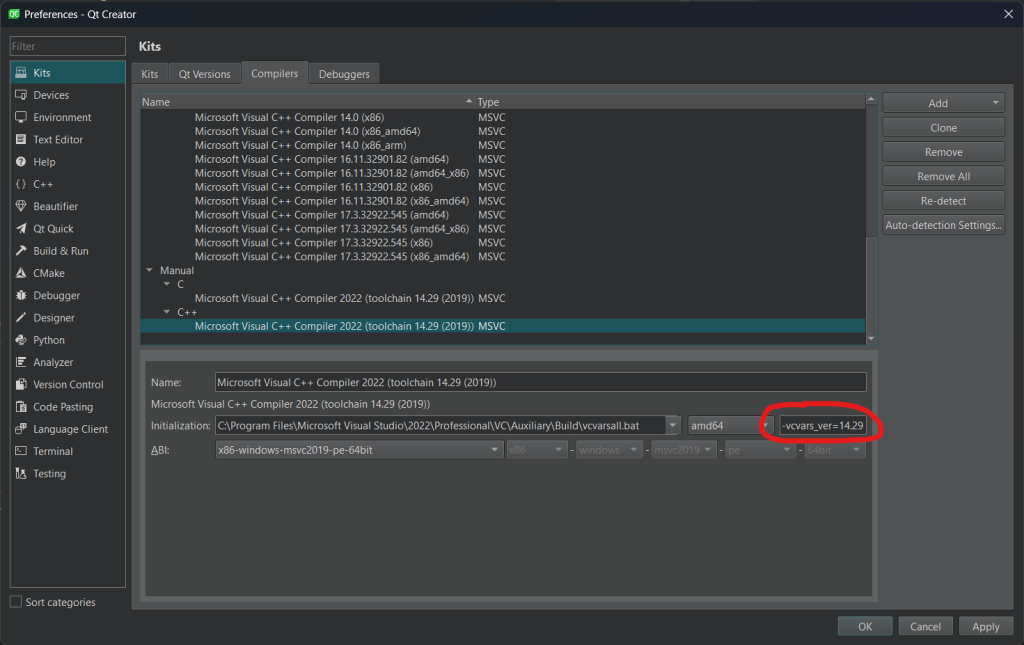

Reducing Visual Studio Installations with Toolchains

If you work on C++ projects on Windows that need to be built with multiple Visual Studio C++ compiler versions, you need some way to manage the installations of all these build environments. Either you have multiple IDEs installed, or you know about build tools (https://aka.ms/vs/17/release/vs_BuildTools.exe) and maybe keep only the latest full VS IDE plus older Build Tools.

However, it turns out that you can have just the latest IDE but with multiple toolchains installed for older compiler targets. You won’t even need the Build Tools.

To use these toolchains you need to install them in your chosen VS installation and then call vcvarsall.bat with an appropriate parameter.

You don’t even need the IDE installed if you don’t use it: the Build Tools with the required toolchains are enough. That’s useful when you use a different IDE like JetBrains CLion or Visual Studio Code. Note, however, that to be license-compliant, you still need a valid Visual Studio subscription.

Installing the toolchain

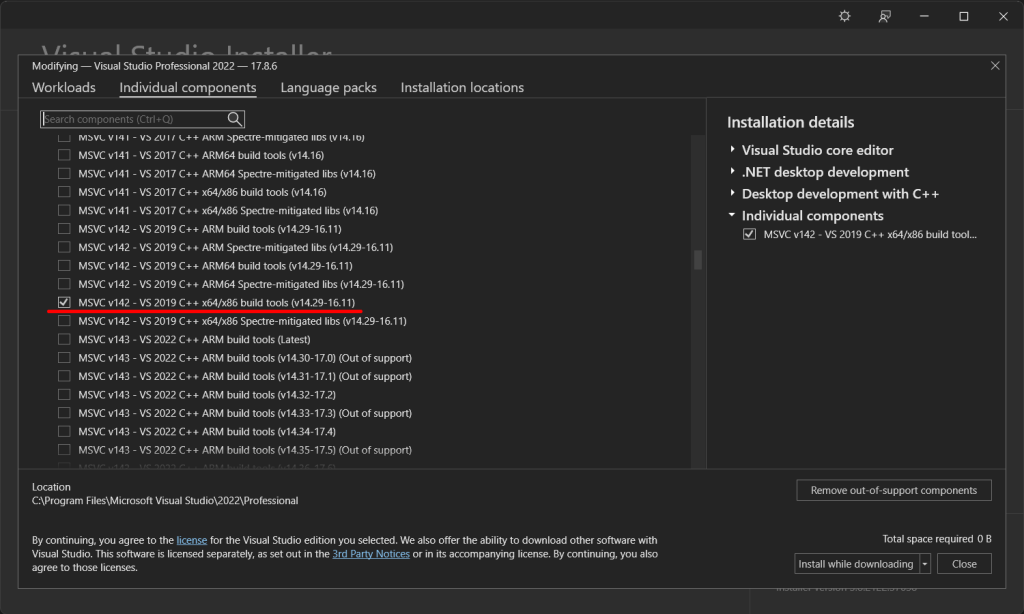

- Go to the Visual Studio Installer and click “Modify” on your main VS version (2022 in my case).
- Go to “Individual components” and search for the appropriate toolchain. For example, to get the latest VS2019 C++ compiler in the VS 2022 installer, you need to look for the 14.29 toolchain.

  How do you know that 14.29 corresponds to VS 2019? You have to consult this table: https://en.wikipedia.org/wiki/Microsoft_Visual_C%2B%2B#Internal_version_numbering and look at the “runtime library version” column, as that is really the C++ compiler version.
- Finish the installation of the desired components.

Setting up the environment from cmd.exe

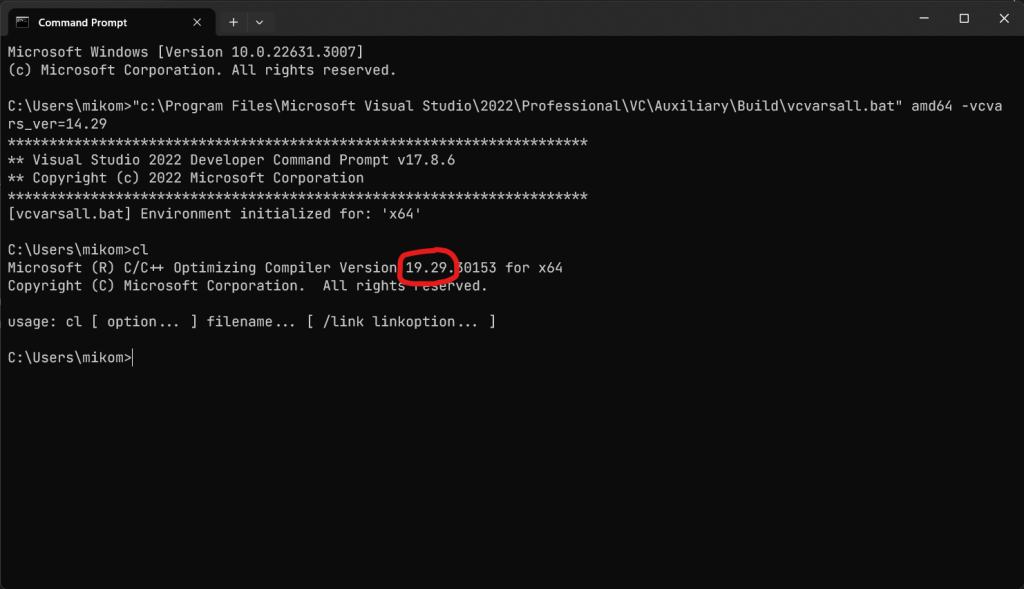

The only thing you need to do to start with a different toolchain is to pass an option to your vcvarsall.bat invocation:

```
C:\Users\mikom>"c:\Program Files\Microsoft Visual Studio\2022\Professional\VC\Auxiliary\Build\vcvarsall.bat" -vcvars_ver=14.29
```

With such a call, I get a shell where cl.exe indeed uses the VS2019 compiler variant:

As you can see, I called vcvarsall.bat from VS2022, yet I got the VS2019 variant of the compiler. For more information about vcvarsall.bat, see: https://learn.microsoft.com/en-us/cpp/build/building-on-the-command-line

Setting up the environment for PowerShell

If you do your compilation in PowerShell, vcvarsall.bat is not very helpful. It spawns an underlying cmd.exe, sets the necessary environment variables inside it, and exits without altering your PowerShell environment.

(You could print the environment in the child cmd.exe and adopt it in your pwsh session, but that’s a hack.)

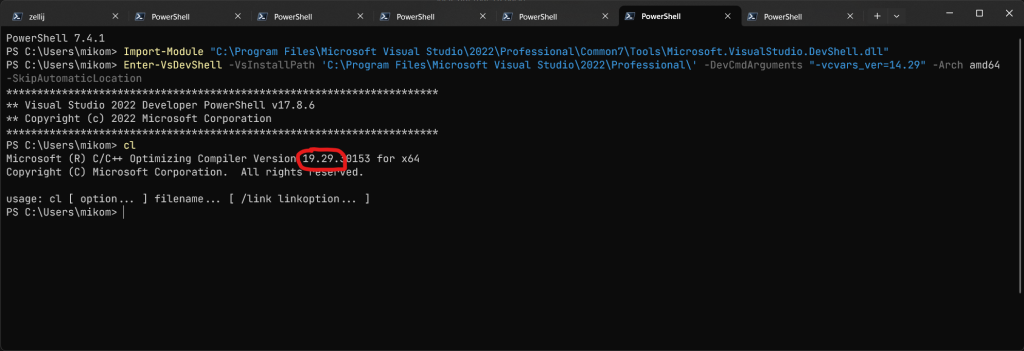

For setting up a development environment from PowerShell, Microsoft introduced a PowerShell module that does just that.

To get it, you first have to load the module:

```powershell
Import-Module "C:\Program Files\Microsoft Visual Studio\2022\Professional\Common7\Tools\Microsoft.VisualStudio.DevShell.dll"
```

And then call the Enter-VsDevShell cmdlet with appropriate parameters:

```powershell
Enter-VsDevShell -VsInstallPath 'C:\Program Files\Microsoft Visual Studio\2022\Professional\' -DevCmdArguments "-vcvars_ver=14.29" -Arch amd64 -SkipAutomaticLocation
```

This cmdlet internally passes arguments to vcvarsall.bat so you specify the toolchain version as above.

With this invocation, you get your desired cl.exe compiler:

For more information about the PowerShell DevShell module, see: https://learn.microsoft.com/en-us/visualstudio/ide/reference/command-prompt-powershell

Setting up Qt Creator Kit with a toolchain

Unfortunately, Qt Creator doesn’t detect the toolchains in a single Visual Studio installation as multiple kits. You have to configure the compiler and the kit yourself:

I am a script, how do I know where Visual Studio is installed?

If you want to query the system for the VS installation path programmatically (to find either vcvarsall.bat or the DevShell PowerShell module), you can use the vswhere tool (https://github.com/microsoft/vswhere).

It’s a small (about 0.5 MB) self-contained .exe, so you can just drop it into your repository and not worry about whether it is installed on the system. You can also install it with winget:

```
winget install vswhere
```

It queries the well-known registry entries and does some other magic to find out what Visual Studio installations your machine has.

By default it lists the installed Visual Studio instances and returns easily parseable key: value pairs with various information about each installation, most notably the installation path in installationPath.

It also has various querying capabilities, like showing only the VS installations that have the C++ workload installed.
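For scripts that consume the default text output, a small parser is enough. The Python sketch below assumes each instance block starts with an `instanceId` line and skips lines without a `: ` separator (such as the logo banner); `parse_vswhere_text` is a hypothetical helper, and the sample input is made up. vswhere also offers a `-format json` option, which is usually the more robust choice if you can use it.

```python
def parse_vswhere_text(output):
    """Parse vswhere's "key: value" text output into one dict per instance."""
    instances = []
    current = None
    for line in output.splitlines():
        key, sep, value = line.partition(": ")  # split on the first ": " only
        if not sep:
            continue  # banner, copyright or blank line
        key = key.strip()
        if key == "instanceId" or current is None:
            current = {}  # a new installation starts here
            instances.append(current)
        current[key] = value.strip()
    return instances


# A made-up sample in the shape of vswhere's text output (values are placeholders).
sample = r"""Visual Studio Locator version X [query version Y]
Copyright (C) Microsoft Corporation. All rights reserved.

instanceId: 0123abcd
installationPath: C:\Program Files\Microsoft Visual Studio\2022\Professional
displayName: Visual Studio Professional 2022
"""

print(parse_vswhere_text(sample)[0]["installationPath"])
# C:\Program Files\Microsoft Visual Studio\2022\Professional
```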

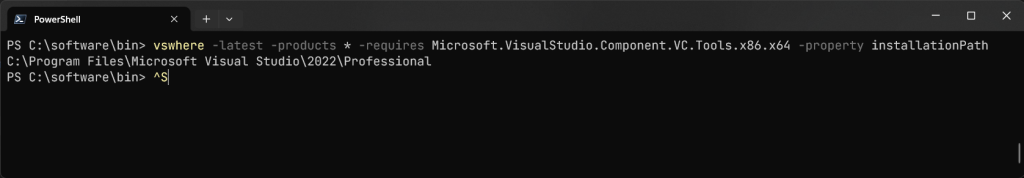

For example, to get the installation path of the newest Visual Studio with C++ workload, you call:

```
vswhere -latest -products * -requires Microsoft.VisualStudio.Component.VC.Tools.x86.x64 -property installationPath
```
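If a script needs the next step as well, the printed path can be turned into a vcvarsall.bat invocation. The Python sketch below assumes vswhere is on PATH and that vcvarsall.bat lives under VC\Auxiliary\Build in the installation folder, as in the cmd.exe example earlier; `find_vs_installation` and `vcvarsall_command` are hypothetical helper names. vcvarsall.bat takes the target architecture (for example x64) as its first argument.

```python
import subprocess
from pathlib import PureWindowsPath

# Same query as the vswhere command above: newest instance with the C++ tools.
VSWHERE_ARGS = [
    "-latest", "-products", "*",
    "-requires", "Microsoft.VisualStudio.Component.VC.Tools.x86.x64",
    "-property", "installationPath",
]


def find_vs_installation(vswhere="vswhere"):
    """Run vswhere and return the installation path, or None if nothing matched."""
    result = subprocess.run([vswhere, *VSWHERE_ARGS],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip() or None


def vcvarsall_command(installation_path, toolset=None, arch="x64"):
    """Build a cmd.exe command line that calls vcvarsall.bat, optionally pinning a toolset."""
    bat = PureWindowsPath(installation_path, "VC", "Auxiliary", "Build", "vcvarsall.bat")
    command = f'"{bat}" {arch}'
    if toolset:
        command += f" -vcvars_ver={toolset}"
    return command


print(vcvarsall_command(r"C:\Program Files\Microsoft Visual Studio\2022\Professional", "14.29"))
```

On a Windows machine, `vcvarsall_command(find_vs_installation(), "14.29")` would produce a command line equivalent to the manual cmd.exe call, with the architecture made explicit.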

Caveats

I haven’t found an easy way to ask vcvarsall for something along the lines of “give me the latest available toolchain in a given VS product line (2019, 2022, etc.)”. So if you request an explicit version (like 14.29) and a newer one appears, your setup will keep selecting the older one. However:

- When vcvarsall.bat is called without any toolchain parameter (-vcvars_ver), it uses the installation’s default toolchain, so you may assume that it’s the latest one in this installation folder.
- Microsoft seems to stop bumping the relevant part of the Visual Studio C++ compiler version once the next version of VS is out. So, for example, it seems that 14.29 will remain the proper target for the VS2019 C++ compiler until the end of time.
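One possible workaround for the first caveat is to look at which toolsets are actually installed. The Python sketch below rests on assumptions: toolsets are installed as version-named folders under VC\Tools\MSVC inside the installation (for example 14.29.30133), and each product line maps to a range of toolset versions (14.1x for VS 2017, 14.2x for VS 2019, 14.30 and later for VS 2022). The helper names are hypothetical. The returned full version could then be passed as -vcvars_ver, which vcvarsall.bat should accept alongside short forms such as 14.29.

```python
from pathlib import Path

# Toolset version ranges per product line: lower bound inclusive, upper bound exclusive.
PRODUCT_LINES = {
    "2017": ((14, 10), (14, 20)),
    "2019": ((14, 20), (14, 30)),
    "2022": ((14, 30), (15, 0)),
}


def version_key(name):
    """'14.29.30133' -> (14, 29, 30133); raises ValueError for non-numeric names."""
    return tuple(int(part) for part in name.split("."))


def latest_toolset(names, product_line):
    """Highest toolset folder name that belongs to the given product line, or None."""
    low, high = PRODUCT_LINES[product_line]
    candidates = []
    for name in names:
        try:
            key = version_key(name)
        except ValueError:
            continue  # ignore anything that is not a dotted version number
        if low <= key < high:
            candidates.append((key, name))
    return max(candidates)[1] if candidates else None


def installed_toolsets(installation_path):
    """Toolset folder names, assuming the VC\\Tools\\MSVC\\<version> layout."""
    msvc = Path(installation_path, "VC", "Tools", "MSVC")
    if not msvc.is_dir():
        return []
    return [entry.name for entry in msvc.iterdir() if entry.is_dir()]


print(latest_toolset(["14.29.30037", "14.29.30133", "14.38.33130"], "2019"))  # 14.29.30133
```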

The post Reducing Visual Studio Installations with Toolchains appeared first on KDAB.

Drag & Drop Widgets with PySide6 — Sort widgets visually with drag and drop in a container

I had an interesting question from a reader of my PySide6 book, about how to handle dragging and dropping of widgets in a container showing the dragged widget as it is moved.

I'm interested in managing movement of a QWidget with mouse in a container. I've implemented the application with drag & drop, exchanging the position of buttons, but I want to show the motion of QPushButton, like what you see in Qt Designer. Dragging a widget should show the widget itself, not just the mouse pointer.

First, we'll implement the simple case which drags widgets without showing anything extra. Then we can extend it to answer the question. By the end of this quick tutorial we'll have a generic drag drop implementation which looks like the following.

Drag & Drop Widgets

We'll start with a simple application which creates a window using QWidget and places a series of QPushButton widgets into it.

You can substitute QPushButton for any other widget you like, e.g. QLabel. Any widget can have drag behavior implemented on it, although some input widgets will not work well as we capture the mouse events for the drag.

```python
from PySide6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget


class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = QPushButton(l)
            self.blayout.addWidget(btn)

        self.setLayout(self.blayout)


app = QApplication([])
w = Window()
w.show()
app.exec()
```

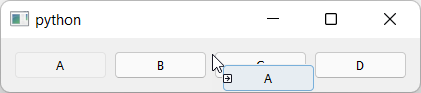

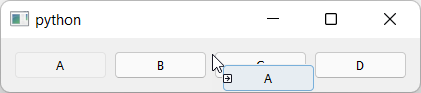

If you run this you should see something like this.

The series of QPushButton widgets in a horizontal layout.

Here we're creating a window, but the Window widget is subclassed from QWidget, meaning you can add this widget to any other layout. See later for an example of a generic object sorting widget.

QPushButton objects aren't usually draggable, so to handle the mouse movements and initiate a drag we need to implement a subclass. We can add the following to the top of the file.

```python
from PySide6.QtCore import QMimeData, Qt
from PySide6.QtGui import QDrag
from PySide6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget


class DragButton(QPushButton):
    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            drag.exec(Qt.DropAction.MoveAction)
```

We implement a mouseMoveEvent which accepts the single e parameter of the event. We check to see if the left mouse button is pressed on this event -- as it would be when dragging -- and then initiate a drag. To start a drag, we create a QDrag object, passing in self to give us access later to the widget that was dragged. We also must pass in mime data. This is used for including information about what is dragged, particularly for passing data between applications. However, as here, it is fine to leave this empty.

Finally, we initiate a drag by calling drag.exec(Qt.DropAction.MoveAction). As with dialogs, exec() starts a new event loop, blocking the main loop until the drag is complete. The parameter Qt.DropAction.MoveAction tells the drag handler what type of operation is happening, so it can show the appropriate icon to the user.
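One detail worth knowing: e.buttons() returns a combination of button flags, and the check above uses ==, so the drag only starts when the left button is the *only* button held. The pure-Python sketch below illustrates the difference between an equality test and a bitwise test. MouseButton here is a hypothetical stand-in built with enum.Flag, using the same bit values as Qt's LeftButton (0x1), RightButton (0x2) and MiddleButton (0x4); it is not the Qt enum itself.

```python
from enum import Flag


class MouseButton(Flag):
    # Hypothetical stand-in for Qt.MouseButton, using Qt's bit values.
    NoButton = 0
    LeftButton = 1
    RightButton = 2
    MiddleButton = 4


def starts_drag_strict(buttons: MouseButton) -> bool:
    # Mirrors the tutorial's check: the left button and nothing else.
    return buttons == MouseButton.LeftButton


def starts_drag_lenient(buttons: MouseButton) -> bool:
    # Alternative: the left button is held, whatever else is pressed.
    return bool(buttons & MouseButton.LeftButton)


both = MouseButton.LeftButton | MouseButton.RightButton
print(starts_drag_strict(both), starts_drag_lenient(both))  # False True
```

Which behavior you want is a design choice; the strict check simply ignores drags made while another button is also held.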

You can update the main window code to use our new DragButton class as follows.

class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = DragButton(l)
            self.blayout.addWidget(btn)

        self.setLayout(self.blayout)

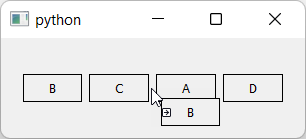

If you run the code now, you can drag the buttons, but you'll notice the drag is forbidden.

Dragging of the widget starts but is forbidden.


What's happening? The mouse movement is being detected by our DragButton object and the drag started, but the main window does not accept drag & drop.

To fix this we need to enable drops on the window and implement dragEnterEvent to actually accept them.

class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.setAcceptDrops(True)
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = DragButton(l)
            self.blayout.addWidget(btn)

        self.setLayout(self.blayout)

    def dragEnterEvent(self, e):
        e.accept()

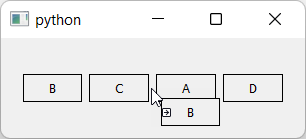

If you run this now, you'll see the drag is accepted and the move icon is shown. This indicates that the drag has started and been accepted by the window we're dragging onto. The icon shown is determined by the action we pass when calling drag.exec().

Dragging of the widget starts and is accepted, showing a move icon.


Releasing the mouse button during a drag-drop operation triggers a dropEvent on the widget you're currently hovering the mouse over (if it is configured to accept drops). In our case that's the window. To handle the move, we implement the reordering in our dropEvent method.

The drop event contains the position the mouse was at when the button was released & the drop triggered. We can use this to determine where to move the widget to.

To determine where to place the widget, we iterate over all the widgets in the layout until we find one whose x position is greater than that of the mouse pointer. If we find one, we insert the dragged widget directly to the left of it and exit the loop.

If we get to the end of the loop without finding a match, we must be dropping past the end of the existing items, so we increment n one further (in the else: block below).

def dropEvent(self, e):
    pos = e.position()
    widget = e.source()
    self.blayout.removeWidget(widget)

    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()
        if pos.x() < w.x():
            # We didn't drag past this widget.
            # insert to the left of it.
            break
    else:
        # We aren't on the left hand side of any widget,
        # so we're at the end. Increment 1 to insert after.
        n += 1

    self.blayout.insertWidget(n, widget)
    e.accept()

The effect of this is that if you drag 1 pixel past the start of another widget the drop will happen to the right of it, which is a bit confusing. To fix this we can adjust the cut off to use the middle of the widget using if pos.x() < w.x() + w.size().width() // 2: -- that is x + half of the width.

def dropEvent(self, e):
    pos = e.position()
    widget = e.source()
    self.blayout.removeWidget(widget)

    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()
        if pos.x() < w.x() + w.size().width() // 2:
            # We didn't drag past this widget.
            # insert to the left of it.
            break
    else:
        # We aren't on the left hand side of any widget,
        # so we're at the end. Increment 1 to insert after.
        n += 1

    self.blayout.insertWidget(n, widget)
    e.accept()
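To see why the midpoint cutoff feels better, here is the same index calculation as a plain function with the Qt calls replaced by tuples. The widgets argument is a hypothetical list of (x, width) pairs standing in for w.x() and w.size().width() of the widgets left in the layout after the dragged one is removed. Returning len(widgets) after the loop matches the for/else with n += 1 for a non-empty layout, and also covers an empty one, where the loop variable n would never be assigned.

```python
def drop_index(pos_x, widgets, use_midpoint=True):
    """Return the layout index at which the dropped widget should be inserted.

    widgets: (x, width) pairs for the remaining widgets, left to right.
    """
    for n, (x, width) in enumerate(widgets):
        # First version's cutoff: the left edge. Improved version: the midpoint.
        cutoff = x + width // 2 if use_midpoint else x
        if pos_x < cutoff:
            return n  # Insert to the left of this widget.
    return len(widgets)  # Past every cutoff: insert at the end.


# Three 80px-wide buttons with 10px gaps; the pointer is 5px into the second one.
widgets = [(0, 80), (90, 80), (180, 80)]
print(drop_index(95, widgets, use_midpoint=False))  # 2 (right of the 2nd button)
print(drop_index(95, widgets))  # 1 (left of the 2nd button)
```

With the left-edge cutoff, barely touching the second button already sends the drop past it; with the midpoint cutoff you have to drag over half of it.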

The complete working drag-drop code is shown below.

from PySide6.QtCore import QMimeData, Qt
from PySide6.QtGui import QDrag
from PySide6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget

class DragButton(QPushButton):
    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            drag.exec(Qt.DropAction.MoveAction)

class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.setAcceptDrops(True)
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = DragButton(l)
            self.blayout.addWidget(btn)

        self.setLayout(self.blayout)

    def dragEnterEvent(self, e):
        e.accept()

    def dropEvent(self, e):
        pos = e.position()
        widget = e.source()
        self.blayout.removeWidget(widget)

        for n in range(self.blayout.count()):
            # Get the widget at each index in turn.
            w = self.blayout.itemAt(n).widget()
            if pos.x() < w.x() + w.size().width() // 2:
                # We didn't drag past this widget.
                # insert to the left of it.
                break
        else:
            # We aren't on the left hand side of any widget,
            # so we're at the end. Increment 1 to insert after.
            n += 1

        self.blayout.insertWidget(n, widget)
        e.accept()

app = QApplication([])
w = Window()
w.show()
app.exec()

Visual Drag & Drop

We now have a working drag & drop implementation. Next we'll move on to improving the UX by showing the drag visually. First we'll add support for showing the button being dragged next to the mouse pointer as it is dragged. That way the user knows exactly what it is they are dragging.

Qt's QDrag handler natively provides a mechanism for showing dragged objects which we can use. We can update our DragButton class to pass a pixmap image to QDrag and this will be displayed under the mouse pointer as the drag occurs. To show the widget, we just need to get a QPixmap of the widget we're dragging.

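from PySide6.QtCore import QMimeData, Qt
from PySide6.QtGui import QDrag, QPixmap
from PySide6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget

class DragButton(QPushButton):
    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            # Render this widget into a pixmap of the same size,
            # and show it under the mouse pointer during the drag.
            pixmap = QPixmap(self.size())
            self.render(pixmap)
            drag.setPixmap(pixmap)
            drag.exec(Qt.DropAction.MoveAction)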

To create the pixmap, we create a QPixmap object, passing in the size of the widget this event is fired on with self.size(). This creates an empty pixmap, which we then pass into self.render to render -- or draw -- the current widget onto it. Finally, we set the resulting pixmap on the drag object.

If you run the code with this modification, you'll see something like the following:

Dragging of the widget showing the dragged widget.


Generic Drag & Drop Container

We now have a working drag and drop behavior implemented on our window. We can take this a step further and implement a generic drag drop widget which allows us to sort arbitrary objects. In the code below we've created a new widget DragWidget which can be added to any window.

You can add items -- instances of DragItem -- which you want to be sorted, as well as setting data on them. When items are re-ordered the new order is emitted as a signal orderChanged.

from PySide6.QtCore import QMimeData, Qt, Signal
from PySide6.QtGui import QDrag, QPixmap
from PySide6.QtWidgets import (
    QApplication,
    QHBoxLayout,
    QLabel,
    QMainWindow,
    QVBoxLayout,
    QWidget,
)

class DragItem(QLabel):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.setContentsMargins(25, 5, 25, 5)
        self.setAlignment(Qt.AlignmentFlag.AlignCenter)
        self.setStyleSheet("border: 1px solid black;")
        # Store data separately from display label, but use label for default.
        self.data = self.text()

    def set_data(self, data):
        self.data = data

    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            pixmap = QPixmap(self.size())
            self.render(pixmap)
            drag.setPixmap(pixmap)
            drag.exec(Qt.DropAction.MoveAction)

class DragWidget(QWidget):
    """
    Generic list sorting handler.
    """

    orderChanged = Signal(list)

    def __init__(self, *args, orientation=Qt.Orientation.Vertical, **kwargs):
        # Pass any remaining arguments (e.g. parent) on to QWidget.
        super().__init__(*args, **kwargs)
        self.setAcceptDrops(True)
        # Store the orientation for drag checks later.
        self.orientation = orientation
        if self.orientation == Qt.Orientation.Vertical:
            self.blayout = QVBoxLayout()
        else:
            self.blayout = QHBoxLayout()

        self.setLayout(self.blayout)

    def dragEnterEvent(self, e):
        e.accept()

    def dropEvent(self, e):
        pos = e.position()
        widget = e.source()
        self.blayout.removeWidget(widget)

        for n in range(self.blayout.count()):
            # Get the widget at each index in turn.
            w = self.blayout.itemAt(n).widget()
            if self.orientation == Qt.Orientation.Vertical:
                # Drag drop vertically.
                drop_here = pos.y() < w.y() + w.size().height() // 2
            else:
                # Drag drop horizontally.
                drop_here = pos.x() < w.x() + w.size().width() // 2

            if drop_here:
                break
        else:
            # We aren't on the left hand/upper side of any widget,
            # so we're at the end. Increment 1 to insert after.
            n += 1

        self.blayout.insertWidget(n, widget)
        self.orderChanged.emit(self.get_item_data())
        e.accept()

    def add_item(self, item):
        self.blayout.addWidget(item)

    def get_item_data(self):
        data = []
        for n in range(self.blayout.count()):
            # Get the widget at each index in turn.
            w = self.blayout.itemAt(n).widget()
            data.append(w.data)
        return data

class MainWindow(QMainWindow):
    def __init__(self):
        super().__init__()
        self.drag = DragWidget(orientation=Qt.Orientation.Vertical)
        for n, l in enumerate(["A", "B", "C", "D"]):
            item = DragItem(l)
            item.set_data(n)  # Store the data.
            self.drag.add_item(item)

        # Print out the changed order.
        self.drag.orderChanged.connect(print)

        container = QWidget()
        layout = QVBoxLayout()
        layout.addStretch(1)
        layout.addWidget(self.drag)
        layout.addStretch(1)
        container.setLayout(layout)
        self.setCentralWidget(container)

app = QApplication([])
w = MainWindow()
w.show()
app.exec()

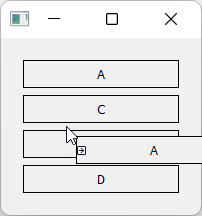

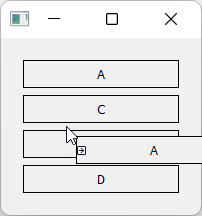

Generic drag-drop sorting in horizontal orientation.


You'll notice that when creating the item, you can set the label by passing it in as a parameter (just like for a normal QLabel, which we've subclassed). But you can also set a data value, which is the internal value of this item -- this is what will be emitted when the order changes, or returned if you call get_item_data yourself. This separates the visual representation from what is actually being sorted, meaning you can use this to sort anything, not just strings.

In the example above we're passing in the enumerated index as the data, so dragging will output (via the print connected to orderChanged) something like:

[1, 0, 2, 3]

[1, 2, 0, 3]

[1, 0, 2, 3]

[1, 2, 0, 3]

If you remove the item.set_data(n) call, you'll see the labels emitted on changes instead.

['B', 'A', 'C', 'D']

['B', 'C', 'A', 'D']
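These outputs can be reproduced without Qt. dropEvent removes the dragged widget and then inserts it at an index n computed on the remaining widgets, which amounts to a list pop followed by an insert. The sketch below assumes the two drags were "A dropped just past B" and then "A dropped just past C"; move_item is a hypothetical helper, not part of the DragWidget code.

```python
def move_item(order, src, dst):
    """Model removeWidget() followed by insertWidget(dst, widget).

    dst indexes the list *after* the item has been removed, just as n does
    in dropEvent.
    """
    items = list(order)  # Work on a copy; leave the caller's list alone.
    item = items.pop(src)
    items.insert(dst, item)
    return items


data = [0, 1, 2, 3]            # Values set with item.set_data(n)
labels = ["A", "B", "C", "D"]  # Default data: the label text

step1 = move_item(data, 0, 1)   # A dropped just past B
step2 = move_item(step1, 1, 2)  # A dropped just past C
print(step1, step2)  # [1, 0, 2, 3] [1, 2, 0, 3]
print(move_item(labels, 0, 1), move_item(move_item(labels, 0, 1), 1, 2))
```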

We've also implemented orientation on the DragWidget using the built-in Qt flags Qt.Orientation.Vertical and Qt.Orientation.Horizontal. This setting allows you to sort items either vertically or horizontally -- the calculations are handled for both directions.


Generic drag-drop sorting in vertical orientation.

Adding a Visual Drop Target

If you experiment with the drag-drop tool above, you'll notice that it doesn't feel completely intuitive. When dragging, you don't know where an item will be inserted until you drop it. If it ends up in the wrong place, you'll need to pick it up and drop it again, using guesswork to get it right.

With a bit of practice you can get the hang of it, but it would be nicer to make the behavior immediately obvious for users. Many drag-drop interfaces solve this problem by showing a preview of where the item will be dropped while dragging -- either by showing the item in the place where it will be dropped, or showing some kind of placeholder.

In this final section we'll implement this type of drag and drop preview indicator.

The first step is to define our target indicator. This is just another label, which in our example is empty, with custom styles applied to give it a solid, shadow-like background. This makes it obviously different from the items in the list, so it stands out as something distinct.

from PySide6.QtCore import QMimeData, Qt, Signal
from PySide6.QtGui import QDrag, QPixmap
from PySide6.QtWidgets import (
    QApplication,
    QHBoxLayout,
    QLabel,
    QMainWindow,
    QVBoxLayout,
    QWidget,
)

class DragTargetIndicator(QLabel):
    def __init__(self, parent=None):
        super().__init__(parent)
        self.setContentsMargins(25, 5, 25, 5)
        self.setStyleSheet(
            "QLabel { background-color: #ccc; border: 1px solid black; }"
        )

We've copied the contents margins from the items in the list. If you change your list items, remember to also update the indicator dimensions to match.

The drag item is unchanged for now, but we need to add some behavior to our DragWidget: adding the target to the layout, and showing and moving it during the drag.

First we'll add the drag target indicator to the layout on our DragWidget. This is hidden to begin with, but will be shown during the drag.

class DragWidget(QWidget):
    """
    Generic list sorting handler.
    """

    orderChanged = Signal(list)

    def __init__(self, *args, orientation=Qt.Orientation.Vertical, **kwargs):
        # Pass any remaining arguments (e.g. parent) on to QWidget.
        super().__init__(*args, **kwargs)
        self.setAcceptDrops(True)
        # Store the orientation for drag checks later.
        self.orientation = orientation
        if self.orientation == Qt.Orientation.Vertical:
            self.blayout = QVBoxLayout()
        else:
            self.blayout = QHBoxLayout()

        # Add the drag target indicator. This is invisible by default,
        # we show it and move it around while the drag is active.
        self._drag_target_indicator = DragTargetIndicator()
        self.blayout.addWidget(self._drag_target_indicator)
        self._drag_target_indicator.hide()
        self.setLayout(self.blayout)

Next we implement DragWidget.dragMoveEvent to show the drag target indicator. We show it by inserting it into the layout and then calling .show() -- inserting a widget which is already in a layout will move it. We also hide the original item which is being dragged.

In the earlier examples we determined the position on drop by removing the widget being dragged, and then iterating over what is left. Because we now need to calculate the drop location before the drop, we take a different approach.

If we wanted to do it the same way, we'd need to remove the item when the drag starts, hold onto it, and re-insert it at its old position if the drag fails. That's a lot of work.

Instead, the dragged item is left in place and hidden during move.

def dragMoveEvent(self, e):
    # Find the correct location of the drop target, so we can move it there.
    index = self._find_drop_location(e)
    if index is not None:
        # Inserting moves the item if it's already in the layout.
        self.blayout.insertWidget(index, self._drag_target_indicator)
        # Hide the item being dragged.
        e.source().hide()
        # Show the target.
        self._drag_target_indicator.show()
    e.accept()

The method self._find_drop_location finds the index where the drag target will be shown (or the item dropped when the mouse is released). We'll implement that next.

The calculation of the drop location follows a similar pattern to before. We iterate over the items in the layout and check whether the mouse position falls within each widget's extent along the layout direction, widened by half the layout spacing on each side so the gaps between widgets also count. We stop at the first match. If nothing matches, the loop runs to completion and n is left at the index of the last item in the layout.

def _find_drop_location(self, e):
    pos = e.position()
    spacing = self.blayout.spacing() / 2

    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()

        if self.orientation == Qt.Orientation.Vertical:
            # Drag drop vertically.
            drop_here = (
                pos.y() >= w.y() - spacing
                and pos.y() <= w.y() + w.size().height() + spacing
            )
        else:
            # Drag drop horizontally.
            drop_here = (
                pos.x() >= w.x() - spacing
                and pos.x() <= w.x() + w.size().width() + spacing
            )

        if drop_here:
            # Drop over this target.
            break

    return n

The drop location n is returned for use in the dragMoveEvent to place the drop target indicator.
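As a check on the logic, here is _find_drop_location rewritten as a plain function over hypothetical geometry tuples, so the spacing and orientation handling can be tried without a running app. Each entry in widgets is an (x, y, width, height) tuple standing in for w.x(), w.y() and w.size(); pos stands in for e.position(). Initialising n to None is our own addition: with an empty list the function returns None, which the existing if index is not None check in dragMoveEvent already handles.

```python
def find_drop_location(pos, widgets, spacing, vertical=True):
    """Return the index of the first widget the pointer is over.

    pos: (x, y) pointer position.
    widgets: (x, y, width, height) tuples in layout order.
    spacing: layout spacing; half of it extends each widget on both sides.
    """
    half = spacing / 2
    axis = 1 if vertical else 0  # Compare y for vertical layouts, x otherwise.
    n = None
    for n, geom in enumerate(widgets):
        start, length = geom[axis], geom[axis + 2]
        if start - half <= pos[axis] <= start + length + half:
            break  # Pointer is over this widget or the gap beside it.
    return n  # No match: n is left at the last index, as in the method above.


# A vertical layout: three 30px-high items separated by 6px of spacing.
column = [(0, 0, 100, 30), (0, 36, 100, 30), (0, 72, 100, 30)]
print(find_drop_location((10, 34), column, spacing=6))   # 1 (gap above item 1)
print(find_drop_location((10, 200), column, spacing=6))  # 2 (below everything)
```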

Next we need to update the get_item_data method to ignore the drop target indicator. To do this, we compare each widget w against self._drag_target_indicator and skip it if they are the same. With this change, the method only returns data from the real items.

def get_item_data(self):
    data = []
    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()
        if w is not self._drag_target_indicator:
            # The target indicator has no data.
            data.append(w.data)
    return data

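If you run the code at this point, the drag behavior will work as expected. But if you drag the widget outside of the window and drop it, you'll notice a problem: the target indicator stays in place, but the item isn't dropped there (the drop is cancelled).

To fix that, we need to implement a dragLeaveEvent which hides the indicator. The dragged item was also hidden during the move, so in the complete code below DragItem.mouseMoveEvent calls self.show() once drag.exec() returns; that way an item dropped outside the window reappears in its original position.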

def dragLeaveEvent(self, e):
    self._drag_target_indicator.hide()
    e.accept()

With those changes, the drag-drop behavior should be working as intended. The complete code is shown below.

from PySide6.QtCore import QMimeData, Qt, Signal
from PySide6.QtGui import QDrag, QPixmap
from PySide6.QtWidgets import (
    QApplication,
    QHBoxLayout,
    QLabel,
    QMainWindow,
    QVBoxLayout,
    QWidget,
)

class DragTargetIndicator(QLabel):
    def __init__(self, parent=None):
        super().__init__(parent)
        self.setContentsMargins(25, 5, 25, 5)
        self.setStyleSheet(
            "QLabel { background-color: #ccc; border: 1px solid black; }"
        )

class DragItem(QLabel):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.setContentsMargins(25, 5, 25, 5)
        self.setAlignment(Qt.AlignmentFlag.AlignCenter)
        self.setStyleSheet("border: 1px solid black;")
        # Store data separately from display label, but use label for default.
        self.data = self.text()

    def set_data(self, data):
        self.data = data

    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            pixmap = QPixmap(self.size())
            self.render(pixmap)
            drag.setPixmap(pixmap)
            drag.exec(Qt.DropAction.MoveAction)
            self.show()  # Show this widget again, if it's dropped outside.

class DragWidget(QWidget):
    """
    Generic list sorting handler.
    """

    orderChanged = Signal(list)

    def __init__(self, *args, orientation=Qt.Orientation.Vertical, **kwargs):
        # Pass any remaining arguments (e.g. parent) on to QWidget.
        super().__init__(*args, **kwargs)
        self.setAcceptDrops(True)
        # Store the orientation for drag checks later.
        self.orientation = orientation
        if self.orientation == Qt.Orientation.Vertical:
            self.blayout = QVBoxLayout()
        else:
            self.blayout = QHBoxLayout()

        # Add the drag target indicator. This is invisible by default,
        # we show it and move it around while the drag is active.
        self._drag_target_indicator = DragTargetIndicator()
        self.blayout.addWidget(self._drag_target_indicator)
        self._drag_target_indicator.hide()
        self.setLayout(self.blayout)

    def dragEnterEvent(self, e):
        e.accept()

    def dragLeaveEvent(self, e):
        self._drag_target_indicator.hide()
        e.accept()

    def dragMoveEvent(self, e):
        # Find the correct location of the drop target, so we can move it there.
        index = self._find_drop_location(e)
        if index is not None:
            # Inserting moves the item if it's already in the layout.
            self.blayout.insertWidget(index, self._drag_target_indicator)
            # Hide the item being dragged.
            e.source().hide()
            # Show the target.
            self._drag_target_indicator.show()
        e.accept()

    def dropEvent(self, e):
        widget = e.source()
        # Use drop target location for destination, then remove it.
        self._drag_target_indicator.hide()
        index = self.blayout.indexOf(self._drag_target_indicator)
        # indexOf() returns -1 (not None) if the widget isn't in the layout.
        if index != -1:
            self.blayout.insertWidget(index, widget)
            self.orderChanged.emit(self.get_item_data())
            widget.show()
            self.blayout.activate()
        e.accept()

    def _find_drop_location(self, e):
        pos = e.position()
        spacing = self.blayout.spacing() / 2

        for n in range(self.blayout.count()):
            # Get the widget at each index in turn.
            w = self.blayout.itemAt(n).widget()

            if self.orientation == Qt.Orientation.Vertical:
                # Drag drop vertically.
                drop_here = (
                    pos.y() >= w.y() - spacing
                    and pos.y() <= w.y() + w.size().height() + spacing
                )
            else:
                # Drag drop horizontally.
                drop_here = (
                    pos.x() >= w.x() - spacing
                    and pos.x() <= w.x() + w.size().width() + spacing
                )

            if drop_here:
                # Drop over this target.
                break

        return n

    def add_item(self, item):
        self.blayout.addWidget(item)

    def get_item_data(self):
        data = []
        for n in range(self.blayout.count()):
            # Get the widget at each index in turn.
            w = self.blayout.itemAt(n).widget()
            if w is not self._drag_target_indicator:
                # The target indicator has no data.
                data.append(w.data)
        return data

class MainWindow(QMainWindow):
    def __init__(self):
        super().__init__()
        self.drag = DragWidget(orientation=Qt.Orientation.Vertical)
        for n, l in enumerate(["A", "B", "C", "D"]):
            item = DragItem(l)
            item.set_data(n)  # Store the data.
            self.drag.add_item(item)

        # Print out the changed order.
        self.drag.orderChanged.connect(print)

        container = QWidget()
        layout = QVBoxLayout()
        layout.addStretch(1)
        layout.addWidget(self.drag)
        layout.addStretch(1)
        container.setLayout(layout)
        self.setCentralWidget(container)

app = QApplication([])
w = MainWindow()
w.show()
app.exec()
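The dropEvent above relies on two layout behaviors: the hidden indicator marks the destination, and insertWidget() moves a widget that is already in the layout (as noted for dragMoveEvent). The list-based model below is a sketch, not Qt's implementation, and assumes insertWidget() takes the widget out of its old slot before inserting it at the given index. It shows that using the indicator's index, read while the dragged item is still in the layout, puts the item in the right place whether it started before or after the indicator, and that skipping the indicator yields the visible order. INDICATOR, insert_widget, drop and item_data are hypothetical names.

```python
INDICATOR = object()  # Stands in for the hidden DragTargetIndicator.


def insert_widget(items, index, widget):
    # Assumed insertWidget() semantics: remove the widget from its old slot
    # first, then insert it at `index` in the shortened list.
    items = [w for w in items if w != widget]
    items.insert(index, widget)
    return items


def drop(items, widget):
    # Mirrors dropEvent: read the indicator's index while the dragged
    # widget is still in the layout, then move the widget there.
    index = items.index(INDICATOR)
    return insert_widget(items, index, widget)


def item_data(items):
    # Mirrors get_item_data: the indicator carries no data, so skip it.
    return [w for w in items if w is not INDICATOR]


# Dragged item before the indicator: A dropped between B and C.
print(item_data(drop(["A", "B", INDICATOR, "C", "D"], "A")))  # ['B', 'A', 'C', 'D']
# Dragged item after the indicator: C dropped at the top.
print(item_data(drop([INDICATOR, "A", "B", "C", "D"], "C")))  # ['C', 'A', 'B', 'D']
```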

If you run this example on macOS you may notice that the widget drag preview (the QPixmap created on DragItem) is a bit blurry. On high-resolution screens you need to set the device pixel ratio and scale up the pixmap when you create it. Below is a modified DragItem class which does this.

Update DragItem to support high resolution screens.

class DragItem(QLabel):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.setContentsMargins(25, 5, 25, 5)
        self.setAlignment(Qt.AlignmentFlag.AlignCenter)
        self.setStyleSheet("border: 1px solid black;")
        # Store data separately from display label, but use label for default.
        self.data = self.text()

    def set_data(self, data):
        self.data = data

    def mouseMoveEvent(self, e):
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()
            drag.setMimeData(mime)
            # Render at x2 pixel ratio to avoid blur on Retina screens.
            pixmap = QPixmap(self.size().width() * 2, self.size().height() * 2)
            pixmap.setDevicePixelRatio(2)
            self.render(pixmap)
            drag.setPixmap(pixmap)
            drag.exec(Qt.DropAction.MoveAction)
            self.show()  # Show this widget again, if it's dropped outside.
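The factor of 2 above suits a typical 2x Retina display, but other screens use ratios such as 1.25, 1.5 or 3. The arithmetic is simply the logical size multiplied by the ratio, as in the plain function below. In the Qt code, one option would be to use the widget's devicePixelRatio() in place of the hardcoded 2 (a suggestion, not part of the original code); rounding up with math.ceil is also our own choice.

```python
import math


def drag_pixmap_size(width, height, ratio):
    """Physical pixel size of a drag pixmap for a widget of the given
    logical size on a screen with the given device pixel ratio."""
    # Round up so the pixmap is never smaller than the rendered widget.
    return math.ceil(width * ratio), math.ceil(height * ratio)


for ratio in (1, 1.5, 2, 3):
    print(ratio, drag_pixmap_size(100, 30, ratio))
```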

That's it! We've created a generic drag-drop handler which can be added to any project where you need to reposition items within a list. Feel free to experiment with the styling of the drag items and targets, as this won't affect the behavior.

Incredibly Simple QR Generation in QML

The Need for Simple & Modular QR Generation in QML

Recently, our designer Nuno Pinheiro needed to generate QR codes for an Android app in QML and started asking around about a simple way to do this. The best existing QML solution was QZXing, a Qt/QML wrapper for the 1D/2D barcode image processing library ZXing. He felt this was too much.

QZXing is quite a large and feature-rich library and is a great choice for something that requires a lot more rigorous work with encoding and decoding barcodes and QR. However, this application wasn’t focused around barcode processing. It just needed to display a few QR codes below its other content; it didn’t need something so heavy-duty. It seemed like too much work to build and link this library and register QML types in C++ if there was something simpler available.

Finding A JavaScript Library to Wrap in QML

There are plenty of minimal QR Code libraries in JS, and JS files can be imported natively in QML. Why not just slap a minified JS file into our Qt resources and expose its functionality through a QML object? No compiling libraries, no CMake, simple setup for a simple task.

My colleague, the one and only Javier O. Cordero Pérez attempted to tackle this first using QRCode.js. He found a few issues, which I’ll let him explain. This is what Javier contributed:

Why Most Browser Libraries Don’t Work With QML

Not all ECMAScript or JavaScript environments are created equal. QML, for example, doesn’t have a DOM (Document Object Model) that represents the contents on screen. That feature comes from HTML, so when a JS library designed for use in the browser attempts to access the DOM from QML, it can’t find these APIs. This limits the use of JS libraries in QML to business logic. Frontend JS libraries would have to be ported to QML in order to work.

Note to those concerned with performance: At the time of writing, JS data structures, and many JS and C++ design patterns, don’t optimize well in QML code when using QML compilers. You should use C++ for backend code if you work in embedded or performance is a concern for you. Even JavaScript libraries have started a trend of moving away from pure JS in favor of Rust and WASM for backend code. Having said that, we cannot overstate the convenience of having JS or QML modules or libraries you can simply plug and play. This is why we did this in the first place.

In my first approach to using qrcodejs, I tried using the library from within a QML signal handler (Component.onCompleted) and found that QRCode.js calls document.documentElement, document.getElementById, and document.createElement, which are undefined in QML, because document is typically an HTMLDocument, part of the HTML DOM API.

I then began attempting to refactor the code, but quickly realized there was no easy way to get the library to use QtQuick’s Canvas element. I knew from past experiences that Canvas performs very poorly on Android, so, being pressed for time and Android being our target platform, I came up with a different solution.

Embedding and Communicating With A Browser View

My second approach was to give QRCode.js a browser to work with. I chose to use QtWebView, because on mobile, it displays web content using the operating system’s web view.

To keep things simple, I sent the QR code’s data to the web page by encoding it to a safe character space using Base64 encoding and passing the result as a URL query parameter. This parameter is then decoded inside the page and passed to the library, which generates a QR code on a Canvas. The WebView’s width is also passed as a parameter; since the WebView is sized to the shorter side of its parent, the image is produced at that size.

This is what my solution looked like at this point:

import QtQuick 2.15
import QtQuick.Window 2.15

Window {
    id: document

    QRCode {
        id: qr
        text: "https://kdab.com/"
        anchors.centerIn: parent
        // The smallest dimension determines and fixes QR code size
        width: 400
        height: 600
    }

    width: 640
    height: 480
    visible: true
    title: qsTr("Web based embedded QR Code")
}

// QRCode.qml
import QtQuick 2.15
import QtWebView 1.15

Item {
    required property string text

    // Due to platform limitations, overlapping the WebView with other QML components is not supported.
    // Doing this will have unpredictable results which may differ from platform to platform.
    WebView {
        id: document
        // String is encoded using base64 and transferred through the page URL
        url: "qrc:///qr-loader.html?w=" + width + "&t=" + Qt.btoa(text)
        // Keep view dimensions to a minimum
        width: parent.width < parent.height ? parent.width : parent.height
        height: parent.height < parent.width ? parent.height : parent.width
        anchors.centerIn: parent
        // Note: To update the contents after the page has loaded, we could expand upon this
        // by calling runJavaScript(script: string, callback: var) from the WebView component.
        // Any method attributes, such as dimensions or the QR Code’s contents would have to
        // be concatenated inside the script parameter.
    }
}

<!-- qr-loader.html -->
<html>
<head>
    <meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
    <style>
        body {
            margin: 0;
            padding: 0;
        }
        #q {
            width: 100%;
            height: 100%;
            margin: auto;
        }
    </style>
</head>
<body>
    <div id="q"></div>
    <script src="jquery.min.js"></script>
    <script src="qrcode.min.js"></script>
    <script>
        function generateQRCode() {
            const s = new URLSearchParams(document.location.search);
            const w = Number(s.get("w"));
            const t = atob(s.get("t"));
            new QRCode(document.getElementById("q"), {
                text: t,
                width: w,
                height: w
            });
        }
        generateQRCode();
    </script>
</body>
</html>
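One caveat with passing the base64 string straight into the URL: base64 output can contain "+", "/" and "=", and URLSearchParams applies form decoding, so a "+" in the query string comes back as a space and corrupts the decoded text. The sketch below shows the same round trip in Python, where parse_qs applies the same form decoding, and escapes the value with urlencode(). build_loader_url and read_loader_url are hypothetical helpers; in the QML above, the analogous fix would be wrapping Qt.btoa(text) in encodeURIComponent().

```python
import base64
from urllib.parse import parse_qs, urlencode, urlsplit


def build_loader_url(text, width):
    # Base64-encode the UTF-8 bytes, then let urlencode() percent-escape
    # the '+', '/' and '=' characters that base64 can produce.
    t = base64.b64encode(text.encode("utf-8")).decode("ascii")
    return "qrc:///qr-loader.html?" + urlencode({"w": width, "t": t})


def read_loader_url(url):
    # parse_qs() uses form decoding, like URLSearchParams: '+' becomes ' '.
    params = parse_qs(urlsplit(url).query)
    width = int(params["w"][0])
    text = base64.b64decode(params["t"][0]).decode("utf-8")
    return width, text


# "ab>" encodes to "YWI+", so plain concatenation corrupts it.
naive = "qrc:///qr-loader.html?w=400&t=" + base64.b64encode(b"ab>").decode()
print(repr(parse_qs(urlsplit(naive).query)["t"][0]))  # 'YWI '
print(read_loader_url(build_loader_url("ab>", 400)))  # (400, 'ab>')
```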

Why You Should Avoid QtWebView On Mobile

If you read the comments in the code, you’ll notice that “due to platform limitations, overlapping the WebView with other QML components is not supported. Doing this will have unpredictable results which may differ from platform to platform.” Additionally, there is so much overhead in loading an embedded site that you can see the exact moment the QR code appears on screen.

Unfortunately for Nuno, QtWebView is unable to load pages embedded as Qt resources on mobile systems. This is because by default, Qt resources become a part of the app’s binary, which can’t be read by the embedded browser. If the site was stored online or the app hosted its own web server, we could load our resources from there. Since this app didn’t do either of those things, all resources had to be copied into a temporary folder and accessed via the file:// protocol. Even then, the embedded browser would fail to locate or load the resources, making it necessary to inline all of our resources into the HTML for this to work.

As you can see, what started as a simple way to use a JS library on the desktop, quickly became cumbersome and difficult to maintain for mobile devices. Given more time, I would’ve chosen to instead re-implement QRCode.js's algorithm using QtQuick’s Shapes API. The Shapes API would allow the QR code to be rendered in a single pass of the scene graph.

Fortunately, there’s a better, simpler and more practical solution. I will defer back to Matt here, who figured it out:

Proper JS in QML Solution

I decided to expand on Javier’s idea and try qrcode-svg. This library uses a modified version of QRCode.js and enables creation of an SVG string from the QR Code data.

Here’s an example snipped from the project’s README:

var qrcode = new QRCode({
    content: "Hello World!",
    container: "svg-viewbox", // Responsive use
    join: true // Crisp rendering and 4-5x reduced file size
});
var svg = qrcode.svg();

Since the data is SVG, it can be used with QML’s Image item natively by transforming it into a data URI and using that as the source for the image. There’s no need to write or read anything to disk, just append the string to "data:image/svg+xml;utf8," and use that as the source file.
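Appending the SVG as-is works for the default black and white colours. If the color or background property is set to a hex value such as "#000000", though, the SVG will contain "#", which URL parsers treat as the start of a fragment, so the rest of the image data may be cut off. Percent-encoding the SVG before appending it avoids this. The Python sketch below shows the idea; svg_data_uri is a hypothetical helper, and in QML encodeURIComponent() would play the same role.

```python
from urllib.parse import quote, unquote

PREFIX = "data:image/svg+xml;utf8,"


def svg_data_uri(svg):
    # quote() percent-encodes '#' (as %23) along with spaces, quotes and
    # angle brackets, so no part of the SVG is mistaken for URL syntax.
    return PREFIX + quote(svg)


svg = '<svg xmlns="http://www.w3.org/2000/svg"><rect fill="#000000" width="1" height="1"/></svg>'
uri = svg_data_uri(svg)
print("#" in svg, "#" in uri)  # True False
```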

Starting Our Wrapper

We can just wrap the function call up in a QML type, called QR, and use that wherever we need a QR code. Let’s make a ridiculously basic QtObject that takes a content string and uses the library to produce an SVG:

// QR.qml
import QtQuick
import "qrcode.min.js" as QrSvg

QtObject {
    id: root

    required property string content
    property string svgString: ""

    Component.onCompleted: {
        root.svgString = new QrSvg.QRCode({
            content: root.content
        }).svg()
    }
}

So, whenever we make a QR object, the string bound to content is used to make the SVG and store it in svgString. Then we can render it in an Image item:

// example.qml
import QtQuick
import QtQuick.Window

Window {
    visible: true

    QR {
        id: qrObj
        content: "hello QR!"
    }

    Image {
        source: "data:image/svg+xml;utf8," + qrObj.svgString
    }
}

This is basically effortless and works like a charm.

Finishing Up The Wrapper

Now let’s completely wrap the QRCode constructor, so all the options from qrcode-svg are exposed by our QML object. We just need to pass every option to the constructor from a QML property and give all the optional properties default values.

While we’re at it, let’s handle the contentChanged signal with onContentChanged, so the SVG is refreshed automatically when the content changes. Note that only content triggers a refresh here; changing another property, such as color, after creation won’t regenerate the SVG unless you add a similar handler for it.

```qml
// QR.qml
import QtQuick
import "qrcode.min.js" as QrSvg

QtObject {
    id: root

    required property string content
    property int padding: 4
    property int width: 256
    property int height: 256
    property string color: "black"
    property string background: "white"
    property string ecl: "M"
    property bool join: false
    property bool predefined: false
    property bool pretty: true
    property bool swap: false
    property bool xmlDeclaration: true
    property string container: "svg"

    property string svgString: ""

    function createSvgString() {
        root.svgString = new QrSvg.QRCode({
            content: root.content,
            padding: root.padding,
            width: root.width,
            height: root.height,
            color: root.color,
            background: root.background,
            ecl: root.ecl,
            join: root.join,
            predefined: root.predefined,
            pretty: root.pretty,
            swap: root.swap,
            xmlDeclaration: root.xmlDeclaration,
            container: root.container
        }).svg()
    }

    onContentChanged: createSvgString()
    Component.onCompleted: createSvgString()
}
```
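To make the property-to-options mapping and the refresh rule concrete, here is a plain-Python model of the QR.qml wrapper. It is an illustration only. `QrOptions`, `QrModel` and the `render` callback are hypothetical stand-ins, with `render` playing the role of `new QrSvg.QRCode(options).svg()`. The defaults mirror the QML properties above. As in the QML version, the SVG is built once on creation, and afterwards only a change to `content` triggers regeneration.

```python
from dataclasses import dataclass, asdict
from typing import Callable


@dataclass
class QrOptions:
    """Mirrors the QML properties of QR.qml; content has no default (required)."""
    content: str
    padding: int = 4
    width: int = 256
    height: int = 256
    color: str = "black"
    background: str = "white"
    ecl: str = "M"
    join: bool = False
    predefined: bool = False
    pretty: bool = True
    swap: bool = False
    xmlDeclaration: bool = True
    container: str = "svg"


class QrModel:
    """Hypothetical Python model of the QR.qml wrapper."""

    def __init__(self, render: Callable[[dict], str], content: str, **overrides):
        self._render = render  # stands in for new QRCode(options).svg()
        self.options = QrOptions(content=content, **overrides)
        self.svg_string = ""
        self._create_svg_string()  # like Component.onCompleted

    @property
    def content(self) -> str:
        return self.options.content

    @content.setter
    def content(self, value: str) -> None:
        if value == self.options.content:
            return  # skip no-op updates; QML normally emits no change signal here
        self.options.content = value
        self._create_svg_string()  # like onContentChanged

    def _create_svg_string(self) -> None:
        # Every option is passed through, as createSvgString() does in QML.
        self.svg_string = self._render(asdict(self.options))
```

Note that, as in the QML wrapper, changing any option other than `content` after creation does not regenerate the SVG.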

Nice and Easy

With these 45 lines of QML and the minified JS file, we have a QML wrapper for the library. Now any arbitrary QML project can include these two files and generate any QR Code that qrcode-svg can make.

Here I use it to re-generate a QR code as you type the content into a TextField:

```qml
// example.qml
import QtQuick
import QtQuick.Window
import QtQuick.Controls

Window {
    id: root
    visible: true

    QR {
        id: qrObj
        content: txtField.text
        join: true
    }

    TextField {
        id: txtField
        width: parent.width
    }

    Image {
        anchors.top: txtField.bottom
        source: (qrObj.svgString === "")
                ? ""
                : ("data:image/svg+xml;utf8," + qrObj.svgString)
    }
}
```

This runs well when deployed on Android, and the image re-renders on content change in under 30 milliseconds, sometimes in as little as 7.

Hopefully this code will be useful to those looking for the simplest no-frills method to generate a QR code in QML, and maybe the post can inspire other QML developers who feel like they’re overcomplicating something really simple.

The solution associated with this post is available in a GitHub repo linked here, so it can be used for your projects and tweaked if needed. There is also a branch that contains the code for Javier's alternate solution, available here.

Note: Nuno settled on QZXing before we got a chance to show him this solution, and was so frustrated about not having it earlier that he made us write this blog post.


KDAB provides market leading software consulting and development services and training in Qt, C++ and 3D/OpenGL. Contact us.

The post Incredibly Simple QR Generation in QML appeared first on KDAB.

Drag & Drop Widgets with PyQt6 — Sort widgets visually with drag and drop in a container

I had an interesting question from a reader of my PyQt6 book about how to handle dragging and dropping widgets in a container, showing the dragged widget as it is moved.

I'm interested in managing movement of a QWidget with mouse in a container. I've implemented the application with drag & drop, exchanging the position of buttons, but I want to show the motion of QPushButton, like what you see in Qt Designer. Dragging a widget should show the widget itself, not just the mouse pointer.

First, we'll implement the simple case which drags widgets without showing anything extra. Then we can extend it to answer the question. By the end of this quick tutorial we'll have a generic drag drop implementation which looks like the following.

Drag & Drop Widgets

We'll start with a simple application which creates a window using QWidget and places a series of QPushButton widgets into it.

You can substitute QPushButton for any other widget you like, e.g. QLabel. Any widget can have drag behavior implemented on it, although some input widgets will not work well as we capture the mouse events for the drag.

```python
from PyQt6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget


class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = QPushButton(l)
            self.blayout.addWidget(btn)
        self.setLayout(self.blayout)


app = QApplication([])
w = Window()
w.show()
app.exec()
```

If you run this you should see something like this.


The series of QPushButton widgets in a horizontal layout.

Here we're creating a window, but the Window widget is subclassed from QWidget, meaning you can add this widget to any other layout. See later for an example of a generic object sorting widget.

QPushButton objects aren't usually draggable, so to handle the mouse movements and initiate a drag we need to implement a subclass. We can add the following to the top of the file.

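```python
from PyQt6.QtCore import QMimeData, Qt
from PyQt6.QtGui import QDrag
from PyQt6.QtWidgets import QApplication, QHBoxLayout, QPushButton, QWidget


class DragButton(QPushButton):

    def mouseMoveEvent(self, e):
        # Only start a drag while the left mouse button is held down.
        if e.buttons() == Qt.MouseButton.LeftButton:
            drag = QDrag(self)
            mime = QMimeData()  # left empty: we only move widgets within this window
            drag.setMimeData(mime)
            # Blocks until the drag finishes (dropped or cancelled).
            drag.exec(Qt.DropAction.MoveAction)
```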

We implement a mouseMoveEvent which accepts the single e parameter of the event. We check whether the left mouse button is pressed on this event (as it would be when dragging) and, if so, initiate a drag. To start a drag, we create a QDrag object, passing in self to give us access later to the widget that was dragged. We must also provide mime data, which is used to describe what is being dragged, particularly when passing data between applications. However, as here, it is fine to leave this empty.

Finally, we initiate the drag by calling drag.exec(Qt.DropAction.MoveAction). As with dialogs, exec() starts a new event loop, blocking the main loop until the drag is complete. The Qt.DropAction.MoveAction parameter tells the drag handler what type of operation is happening, so it can show the appropriate icon to the user.
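The DragButton above starts a drag on the very first mouse move while the left button is held. A common refinement, which is not part of this tutorial's code, is to wait until the pointer has travelled at least `QApplication.startDragDistance()` pixels (Manhattan distance) from the press position, so that ordinary clicks are not turned into drags. The pure-Python sketch below models only that check. `should_start_drag` is a hypothetical helper, and the threshold of 10 is an illustrative stand-in for the platform-dependent Qt value.

```python
def manhattan_length(dx: float, dy: float) -> float:
    """Same measure as QPointF.manhattanLength(): |dx| + |dy|."""
    return abs(dx) + abs(dy)


def should_start_drag(press_pos, current_pos, left_button_down, start_drag_distance=10):
    """Decide whether a mouse move should begin a drag.

    press_pos and current_pos are (x, y) tuples. start_drag_distance stands in
    for QApplication.startDragDistance(); 10 is only an illustrative default.
    """
    if not left_button_down:
        return False
    dx = current_pos[0] - press_pos[0]
    dy = current_pos[1] - press_pos[1]
    return manhattan_length(dx, dy) >= start_drag_distance
```

In the widget itself, this would amount to storing `e.position()` in a `mousePressEvent` override and, in `mouseMoveEvent`, returning early while `(e.position() - press_pos).manhattanLength()` is still below `QApplication.startDragDistance()`.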

You can update the main window code to use our new DragButton class as follows.

```python
class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = DragButton(l)
            self.blayout.addWidget(btn)
        self.setLayout(self.blayout)
```

If you run the code now, you can drag the buttons, but you'll notice the drag is forbidden.

Dragging of the widget starts but is forbidden.


What's happening? The mouse movement is being detected by our DragButton object and the drag started, but the main window does not accept drag & drop.

To fix this we need to enable drops on the window and implement dragEnterEvent to actually accept them.

```python
class Window(QWidget):
    def __init__(self):
        super().__init__()
        self.setAcceptDrops(True)
        self.blayout = QHBoxLayout()
        for l in ["A", "B", "C", "D"]:
            btn = DragButton(l)
            self.blayout.addWidget(btn)
        self.setLayout(self.blayout)

    def dragEnterEvent(self, e):
        # Accept every drag entering the window so that drops are allowed.
        e.accept()
```

If you run this now, you'll see that the drag is accepted and the move icon is shown. This indicates that the drag has started and been accepted by the window we're dragging onto. The icon shown is determined by the action we pass when calling drag.exec().

Dragging of the widget starts and is accepted, showing a move icon.


Releasing the mouse button during a drag & drop operation triggers a dropEvent on the widget you're currently hovering the mouse over (if it is configured to accept drops). In our case that's the window, so to handle the move we implement a dropEvent method on it.

The drop event contains the position the mouse was at when the button was released & the drop triggered. We can use this to determine where to move the widget to.

To determine where to place the widget, we iterate over all the widgets in the layout until we find one whose x position is greater than that of the mouse pointer. When we find one, we insert the dragged widget directly to the left of it and exit the loop.

If we get to the end of the loop without finding a match, we must be dropping past the end of the existing items, so we increment n one further (in the else: block below).

```python
def dropEvent(self, e):
    pos = e.position()
    widget = e.source()
    self.blayout.removeWidget(widget)

    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()
        if pos.x() < w.x():
            # We didn't drag past this widget.
            # insert to the left of it.
            break
    else:
        # We aren't on the left hand side of any widget,
        # so we're at the end. Increment 1 to insert after.
        n += 1

    self.blayout.insertWidget(n, widget)
    e.accept()
```
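Stripped of the Qt objects, the loop is a search for the first widget whose left edge lies to the right of the drop point. The pure-Python sketch below reproduces the same for/else logic so it can be checked in isolation. `drop_index_left_edge` is a hypothetical helper that takes the x positions of the widgets remaining in the layout. One difference from the method above: when the layout is empty after removing the dragged widget (that is, when it held only that one widget), the original raises UnboundLocalError because n was never bound, whereas this version returns 0.

```python
def drop_index_left_edge(drop_x, widget_xs):
    """Return the layout index at which to insert the dropped widget.

    widget_xs are the x positions of the widgets still in the layout
    (after the dragged widget was removed), in layout order.
    """
    for n, x in enumerate(widget_xs):
        if drop_x < x:
            # We didn't drag past this widget: insert to the left of it.
            break
    else:
        # Not left of any widget (or the list is empty): insert at the end.
        n = len(widget_xs)
    return n
```

With widgets at x = 0, 100 and 200, a drop at x = 101 returns index 2, placing the widget to the right of the one starting at 100. That is the "1 pixel past" behaviour described next.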

The effect of this is that if you drag 1 pixel past the start of another widget, the drop will happen to the right of it, which is a bit confusing. To fix this, we can move the cut-off to the middle of each widget with the condition pos.x() < w.x() + w.size().width() // 2, that is, the widget's x position plus half of its width.

```python
def dropEvent(self, e):
    pos = e.position()
    widget = e.source()
    self.blayout.removeWidget(widget)

    for n in range(self.blayout.count()):
        # Get the widget at each index in turn.
        w = self.blayout.itemAt(n).widget()
        if pos.x() < w.x() + w.size().width() // 2:
            # We didn't drag past this widget.
            # insert to the left of it.
            break
    else:
        # We aren't on the left hand side of any widget,
        # so we're at the end. Increment 1 to insert after.
        n += 1

    self.blayout.insertWidget(n, widget)
    e.accept()
```
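The midpoint rule can also be written without an explicit loop. The widgets in a horizontal layout are ordered left to right, so their midpoints are sorted, and the standard library's `bisect.bisect_right` can find the insertion index directly. This is an alternative formulation, not the tutorial's code. `drop_index_midpoint` and its `(x, width)` input format are hypothetical, and it assumes a left-to-right layout direction.

```python
from bisect import bisect_right


def drop_index_midpoint(drop_x, geometries):
    """Insertion index using each widget's horizontal midpoint as the cut-off.

    geometries: (x, width) pairs for the widgets still in the layout, in
    left-to-right order, so the midpoints are sorted ascending.
    """
    midpoints = [x + width // 2 for x, width in geometries]
    # First index whose midpoint lies strictly to the right of the drop
    # point, matching `if pos.x() < w.x() + w.size().width() // 2`.
    return bisect_right(midpoints, drop_x)
```

An empty list of geometries simply yields index 0, so the single-widget case needs no special handling here.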

The complete working drag-drop code is shown below.

from PyQt6.QtCore import QMimeData, Qt

from PyQt6.QtGui import QDrag